Updated to include Dr. Mann’s words.

—————————-

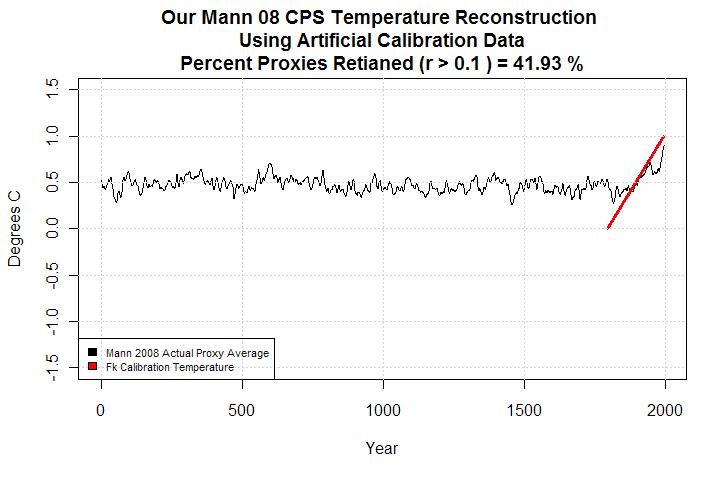

The last time I did this, R was a brand new language to me. After 6 months messing around in my free time I speak rudimentary R with a C accent. R is a totally free language that anyone can download and learn. This post is a demonstration of the methods behind the Mann08 CPS hockey stick reconstruction. The difference between this and the numerous posts I did before is better R programming and a lot more comments in the code. If you’re serious about understanding vs advocating, you can figure this out. There is not one person I can think of who has ever commented here, incapable of figuring this out.

CPS is composite plus scale, which is an invented method for calibrating proxies to measured temperatures in paleoclimatology reconstructions. In paleoclimatology methods are too often invented to find the signal in the noise – this is not a new problem and it stems from the large signal to noise ration of paleo-data. If you happen to be a paleoclimatologist who does temperature reconstructions, please try your methods on ARIMA data with a known signal before employing it on whatever your proxy is.

I have hundreds of new readers, who didn’t get the day by day experience of my discovery of climatology math. Well some of my early work was a little rough, however it was correct and the specifics still stand uncriticized.

This post was prompted by some people in blogland (despite the complete lack of rational criticism) claiming that my demonstrations of the CPS hockey stick math is faulty rather than the actual hockey stick itself. An oddly reversed situation which could only exist in the new progressive anti-world. Actually, I don’t recall any real criticism of the method or the result other than statements around the AGW crowd that – ‘it’s been proven wrong’.

I’ve cleaned up the code and seriously over-commented it in the hopes that honest people will be able to understand what is going on here. I’ll do a short explanation for it but the primary explanation is in the programming code. To understand it fully you should to read it step by step and run it.

Michael Mann knows full well that this result exists, his explanation is as follows from an RC thread.

Actually, this line of attack is even more disingenuous and/or ill-informed than that. Obviously, if one generates enough red noise surrogate time series (especially when the “redness” is inappropriately inflated, as is often done by the charlatans who make this argument), one can eventually match any target arbitrarily closely.

You can note that this post uses his Actual data rather than red noise data. Dr. Mann’s continued explanation is here.

What this specious line of attack neglects (intentionally, one can safely conclude) is that a screening regression requires independent cross-validation to guard against the selection of false predictors. If a close statistical relationship when training a statistical model arises by chance, as would be the case in such a scenario, then the resulting statistical model will fail when used to make out-of-sample predictions over an independent test period not used in the training of the model. That’s precisely what any serious researchers in this field test for when evaluating the skillfulness of a statistical reconstruction based on any sort of screening regression approach.

So a potentially overstated statistical check is what determines if CPS works. This is in fact false, CPS is incapable of recovering an accurate signal from data. A fact which I will demonstrate in these next few posts. This post however, does not address the statistical validation issue, it does however demonstrate that Dr. Mann is correct that any signal at all can be made using Mann08 math and data. A truth for many of his hockey stick creation methods.

An explanation of Composite Plus Scale (CPS).

Proxy Data

The proxy data is noisy, the noise in proxies comes from other factors than temperature. Tree ring widths are a primary proxy in the Mann08 reconstruction and don’t only grow wider (and narrower) from temperature they also grow according to humidity, bugs, lightning, soil condition, sunlight CO2 and numerous other factors. This means that tree ring widths are naturally noisy data. The same is true for mollusk shells, interpretations of historic documents and a pile of other proxies.

Correlation and deletion.

In engineering, when you have noisy data you might just take an average or an area weighted average and hope you have enough data to make it work. This makes sense but you may choose to try other techniques to extract a signal based on foreknowledge of what created the noise, filtering, PCA, ICA and such. In this case with tree rings and mixed odd proxies, there is very little that can be done which can improve on averaging and filtering but whatever you do you don’t want to use a biased method for signal hunting.

If you are using a known biased signal extracting method as an engineer, and you want your product to actually work, you had better test it. I demonstrate here that Mann 08’s CPS method can pull any signal it wants from noisy proxy data. The CPS method which is likely only used in climatology, naturally distorts an artificial signal in the data but besides that it extracts the best match for whatever shape you wish to find.

Mann starts the process prior to CPS and measures correlation (likeness) of the proxy data to instrumental temperature data and deletes the proxies that don’t follow the measured temperature curve. This is very important, only a few of proxies (Luterbacher) used are demonstrated to be in any way related to temperature and they weren’t really proxies, but rather contain actual temperature data. Really a hard situation to imagine for an engineer.

Of the 1,209 proxy records in the full dataset, 484 (40%) pass the temperature-screening process over the full (1850–1995) calibration interval – Mann08

So we have noisy data which may or may not be related to temperature and in the case of 71 Luterbacher proxies is temperature data and to sort it we’re going to apply Mannian CPS math to find the hoped for signal hidden in the noise.

Step 1

Measure correlation between the most recent years of proxy data and the most recent years of temperature data. Throw away everyting that doesn’t at least weakly correlate.

– this step in Mann08 deleted 725 of 1209 proxys.

Step 2

All proxies are measured in their own units (i.e. millimeters or parts per million) so you need to recenter (change the mean of) each proxy in the calibration range to match the mean of the measured data.

Step 3

Rescale each proxy so that the standard deviation of the accepted proxies from step 1 match the standard deviation of the calibration signal. Standard deviation is basically how much a graph wiggles up and down around the average. So this matches the amplitude of the wiggles of something which may or may not be temperature to the amplitude of the wiggles of the measured temperature.

Step 4

Take the average.

———

Sounds simple, when I read about step 1 I actually didn’t sleep that night. Throw away the data that doesn’t fit your pre-determined conclusion of a match to temperature. Really, I was so mad I didn’t sleep, remember we’re paying big $$ for this stuff and we must not pretend it’s somehow ok. NOT good.

After the rescaling operation which makes the best possible fit of each proxy to the temperature signal and averaging, the calibration range signal is always found.

Here are some of the signals I was able to extract from Mann08 actual proxy data using their own methods.

And just to really drive the point home.

Now I’ve shown that before, but the real point of all this is the code. I’ve cleaned it up and commented the code very thoroughly. It is fully turnkey and anyone interested can put it into R copy it into the script window and run it. Thanks to Steve McIntyre for writing the download (a long time ago) and hosting the data.

Now this is part 1 of the demonstration. Part 2 will uses synthetic ARMA data to demonstrate the distortion of any signal in the data by this method. That post is now available at this link: Historic Hockey Stick – Pt 2 Shock and Recovery

===============================================================

DOWNLOAD – R – HERE — http://www.r-project.org/

DOWNLOAD CODE mann-cps-demo THIS DOWNLOAD AVOIDS FORMATTING PROBLEMS FROM WORDPRESS FOR R

All you need to do is copy and paste from the word document above.

Code is also here below reformatted by wordpress:

##TEMPERATURE CORRELATION

#this calculates statlist with 6 items in list###########################################################################

##################### TURNKEY DATA DOWNLOADER #############################

###########################################################################

#Mann PRoxy Data and Info

method=”online”

if (method==”offline”) {load(“d:/climate/data/mann08/mann.tab”)

load(“d:/climate/data/mann08/mannorig.tab”)

load(“d:/climate/data/mann08/idorig.tab”)

names(mannorig)=idorig$idorig

load(“d:/climate/data/mann08/details.tab”)

source(“d:/climate/scripts/mann.2008/instrumental.txt”)} else {

url=”http://www.climateaudit.info/data/mann.2008″

download.file(file.path(url,”mann.tab”),”temp.dat”,mode=”wb”);load(“temp.dat”)

download.file(file.path(url,”mannorig.tab”),”temp.dat”,mode=”wb”);load(“temp.dat”)

download.file(file.path(url,”details.tab”),”temp.dat”,mode=”wb”);load(“temp.dat”)

download.file(file.path(url,”idorig.tab”),”temp.dat”,mode=”wb”);load(“temp.dat”)

names(mannorig)=idorig$idorig

source(“http://www.climateaudit.info/scripts/mann.2008/instrumental.txt”)}

############################################################################

################ FUNCTIONS ################################################

#################################################################################### CREATE TIME SERIES ##########

gg = function(X)

{

ts(X[,2],start=X[1,1])

}######## GAUSSIAN FILTER FUNCTION TESTED AT CA #########

######## set to 11 years ###############################

source(“http://www.climateaudit.info/scripts/utilities.txt”)ff=function(x)

{

filter.combine.pad(x,truncated.gauss.weights(11) )[,2]

}

##########################

##### END FUNCTIONS ######

##########################library(gplots)

use0=”pairwise.complete.obs”#variable names

###########################################################################

########### mannorig is 1357 infilled series,,, ###########################

########### mann is the 1209 series after RegEM infilling #################

###########################################################################

####### THIS SECTION LETS YOU USE INFILLED OR NON-INFILLED DATA #####

#######try either of these for fun. comment out EITHER LINE#######mano=mannorig #copy non-infilled PROXY series to different memory

#mano=mann #copy REGEM infilled PROXY series to different memory#####################################################################

#####################################################################lent=length(mano) ##lent = number of series chosen above

#filter all series using gaussian CA filter

for(i in 1:lent)

{

mano[[i]][,2]=ff(mano[[i]][,2])

}#apply functions to look for change values in this section

#change values here and run from here down only#############################################################################################

#############################################################################################

# MANN O8 MATH ALLOWS YOU TO FIND WHATEVER PATTERN YOU WANT IN PROXY DATA

# THIS SECTION GENERATES DATA REPRESENTING WHAT YOU WANT TO FIND

# I’VE PROVIDED SEVERAL POSSIBLE FUNCTIONS YOU CAN CHOOSE FROM BY DELETING THE ‘#’ SIGNS

# I’VE ALSO PROVIDED A SECTION BY WHICH YOU CAN SET THE PARAMETERS FOR EACH FUNCTION

# YOU CAN MAKE YOUR OWN POSITIVE AND NEGATIVE HOCKEY STICKS FROM THE ACTUAL PROXY DATA

# FOLLOW THE INSTRUCTIONS AND YOU CAN DO IT YOURSELF

###################################################################################################### CHANGE THESE VALUES TO DETERMINE THE CALIBRATION PERIOD

######### THESE VALUES ARE EQUIVALENT TO GROUND TEMPERATURES IN M08

######### INSTEAD OF TEMPERATURES WE CAN LOOK FOR ANYTHING AND STILL FIND IT

######### “CALIBRATION PERIOD” DETERMINES THE FUNCTION TIME PERIOD YOU ARE LOOKING FOR IN YEARS

######### APPROPRIATE VALUES ARE INCLUDED IN THE REMARKS AS SUGGESTIONS FOR EACH LINE

#########

######### AFTER TOP IS LOADED RUN FROM HERE DOWN

endyr=1995 #end year for function correlation 100-2000 (INTEGER)

leng=200 #lenght of function to correlate in years 50 – 1000 (INTEGER)

cycles=4 #cycles of sine wave (ANY)

#################################### FUNCTIONS CHOOSE ONE BY COMMENTING OUT OTHER 3 WITH ‘#’

################## TRY THESE AND MAKE YOUR OWN — ‘FAKE CALIBRATION TEMPERATURE DATA’

#m=c(1:leng)/-leng ###### 0 to -1 NEGATIVE linear slope

#m=c(1:leng)/leng ###### 0 to 1 POSITIVE linear slope

#m=sin( (1:leng)/(leng/cycles)*pi) #sin wave with # cycles

m=-sin( (1:leng)/(leng/cycles)*pi) #-sin wave with # cycles

###############################################################################################################

# THIS LINE DETERMINES HOW WELL EACH INDIVIDUAL PROXY MUST CORRELATE TO THE FUNCTION

# CORRELATION IS ALWAYS BETWEEN 0 AND 1

# CORRELATION VALUES GREATER THAN THIS NUMBER ARE ACCEPTED

# MANN 08 USED 0.1 HIGHER NUMBERS MEAN LESS PROXIES ACCEPTED AND BETTER FINAL FIT

# LOWER NUMBERS MEAN MORE ACCEPTED AND WORSE FINAL FITr=.3 #sorting correlation criteria -1 TO 1 POSITIVE VALUES MAKE MORE SENSE

#############################################################################################

#############################################################################################

#############################################################################################leng=length(m) ## RECALCULATES leng SO THAT PEOPLE WHO MESS AROUND WITH THE FUNCTIONS ABOVE DON’T HAVE TROUBLE

m=ts(m,endyr-leng) ## CONVERT FAKE CALIBRATION TEMPERATURE DATA TO TIME SERIES STARTING AT endyr MINUS LENTcorv = array(0,lent) #set corv AS A VECTOR OF LENGTH EQUAL TO NUMBERR OF PROXIES

stat = rep(NA,lent) #INITIALIZE STAT VECTOR, THANKS ROMAN M

for(i in 1:lent)## CYCLE BETWEEN { } ONE TIME FOR EACH PROXY

{

y=gg(mano[[i]]) ## assign y as a timeseries version of each individual proxy

y=ts.union(m,y) ## make a union – two column proxy of the fake temperature function and the individual proxy

val = window(y, start(m)[1],end=end(m)[1])

stat[i]=cor(val,use=use0)[1,2]

}pass= stat > r # BUILD VECTOR OF PASS FAILS, ONE ELEMENT FOR EACH PROXY .. TRUE == GREATER THAN r

#############################################################################################

# NOW WE HAVE SELECTED OUR PROXIES BASED ON HIGHEST CORRELATION TO THE CHOSEN

# ‘FAKE CALIBRATION TEMP DATA’

#############################################################################################

#############################################################################################

#######NOW WE CALCULATE THE MEAN AND STANDARD DEVIATION FOR EACH PROXY AND SUBTRACT AND DIVIDE

#######THIS IS THE BUSINESS PORTION OF MANN 08 CPS – COMPOSITE PLUS SCALE

#######calculate SD for sorting function m — used in CPS to match standard

#######deviation and offset of all proxies to the function sd and offset

#############################################################################################

#############################################################################################msig=mean (m,na.rm=T) ## CALCULATE THE MEAN OF FAKE CALIBRATION TEMP FUNCTION

ssig=sd(m,na.rm=T) ## CALCULATE THE STANDARD DEVIATON OF FAKE CALIBRATION TEMP FUNCTION#clear values

y=NULL;

x=NULL;

Z=NULLfor(j in 1:lent)#LOOP THROUGH ALL PROXIES

{

if(pass[j]==TRUE) #ONLY USE PROXIES THAT PASS CORRELATION REMEMBER pass IS A TRUE/FALSE FOR EACH PROXY

{

y=(gg(mano[[j]])) #MAKE Y A TIME SERIES FOR EACH PROXY

val = window(y, start(m)[1],end=end(m)[1]) #TRUNCATE TIME SERIES TO YEARS WHERE FAKE CALIBRATION TEMP FUNCTION EXISTS

#CPS USES ONLY CALIBRATION RANGE TO SCALE AND OFFSET PROXY

#THIS DETAIL IS THE PRIMARY POINT WHERE IT GOES WRONG

m0=mean(val,na.rm=T)# CALCULATE MEAN OF PROXY SERIES IN FAKE CALIBRATION TEMP YEAR RANGE

sd0=sd(val,na.rm=T) # CALCULATE STANDARD DEVIATION OF PROXY SERIES IN FAKE CALIBRATION TEMP YEAR RANGE

################# CPS METHOD IS APPLIED HERE #######################

yscale=y-m0 #CENTER TEMP PROXIES BY SUBTRACTING MEAN OF PROXY IN FK CAL TEMP RANGE

yscale=yscale/sd0 #SCALE INDIVIDUAL PROXY BY DIVIDING SD OF PROXY IN FK CAL TEMP RANGE – more wiggles stronger division

yscale=yscale*ssig #SCALE INDIVIDUAL PROXY BY MULTIPLYING BY SD OF FK CAL TEMP – this scales the proxies to match the wiggles of fk cal temp data

yscale=yscale+msig #CENTER INDIVIDUAL PROXY BY ADDING TO OFFSET MATCH FK CAL TEMPx=ts.union(x,ts (yscale,time(y)[1])) #ADDS EACH INDIVIDUAL PROXY INTO ONE BIG MATRIX – EACH TIME THROUGH ONE MORE IS ADDED

}

}adrecon = window(x,start=0,end=1995) #A COUPLE OF PROXIES EXTEND FAR IN THE PAST SO USE YEARS 0 to 1995 TO MATCH MANN 08

Z=rowMeans(adrecon,na.rm=TRUE) #AVERAGE ALL REMAINING SCALED SERIES YEARS TOGETHER PROXIES ARE IN COLUMNS BY YEARS AT THIS POINT SO AVERAGE ROWS

Z=ts(Z,start=0) #MAKE Z INTO A TIME SERIES STARTING AT TIME 0AD###plot###

tit=paste(“Our Mann 08 CPS Temperature Reconstruction \nUsing Artificial Calibration Data\nPercent Proxies Retained (r >”,r,”) =”,round(sum(pass)/length(pass)*100,2),”%” )

plot(Z,type=”l”,ylim=c(-1.5,1.5),main = tit,xlab=”Year”, ylab=”Degrees C”)

lines(m,col=”red”,lwd=3)

grid()

smartlegend(x=”left”,y=”bottom”,inset=0,fill=1:2,legend=c(“Mann 2008 Actual Proxy Average”,”Fk Calibration Temperature”) ,cex=.7)

#savePlot(“c:/agw/r=0_3 NEG SINE”,type=”jpg”)

[snip] Run the code before you criticize tco. You will not dominate this thread with uninformed commentary.

Put comment 2 back. It is a relavent comment on the concepts.

==================================================

REPLY: I don’t know which is comment 2 but I found the latest one standard troll propaganda going on about Steve and Ross being of low credibility.

You are wrong TCO, study if you want to be right. You will not take over this thread with bullcrap.

jeffid – Thank you for that clear explanation.

If all this made you that mad, then I hesitate to even post 😉 Just to be clear, I am an AGW skepic.

However, is it not reasonable that a proxy ***should*** correlate with the instrumental record? To me, if one didn’t, then I would suspect it isn’t measuring temperature and throw it out, just as in the method you use. Now I see how one can feed random noise into this program and come up with the same shape in the instrumental period. This seems to be a trivial and harmless result because the procedure does select for just those shapes. But the point of the exercise is to identify proxies that actually correlate with temperature and it seems this technique should do that. It does not guarantee that a given proxy does in fact measure temperature, but is seems to me to be a reasonable screen since we cannot convert a given proxy to temperature X, which would be desirable. If a proxy fits the instrumental record, then its hind-cast has a better chance of being correct.

If your point is that proxies should not be used unless they can be directly converted to temperature, that is a different angle on it.

On second thought, since Mann uses a suspect instrumental record, the result is also suspect. Therefore, the technique is reasonably sound, just not 100% sure.

It would be nice if we had UAH data from the past 200 years.

#2 If you sort your proxies by correlation, you get whatever you want. Not temperature data.

I don’t find this point too subtle but the upside down thermometer wasn’t very subtle to me either. The anger comes from the fact that peer review allows this sort of thing as a standard practice in climatology and we will sell our economy away based on ‘unprecedented’ warming.

You are absoulutely correct that proxies should correlate to temperature, but in proxies you get noise- lots of noise. Since they are proxies with massive noise, correlation is not a reasonable method for deletion. What happens is similar noise in the calibration period is overlaid repeatedly until the signal you want to see is very clear. In the meantime the historic noise averages to zero leaving a net distorted temperature curve.

This is why people have published papers and blogs to demonstrate that signal-less noise can also be used to the same result.

That’s for the next posts however. If you don’t want to wait for the cleaned up result, I have several posts at the link on the top of the Air Vent on this.

Jeff ID, thanks for running off TCO so that perhaps you can keep a clear channel for anyone who might want to comment and critique constructively on what you have presented here.

I have not run through your code at this point but what comes clearly through your exposition of your sensitivity analysis is that the direction of the overall trend of the reconstruction is very sensitive to the selection criteria. Your explanation aimed at the layman view appears clear to me.

I think if there is common thread running through many of the papers by scientists who are also AGW advocates it is that they tend to work with data that can be selected (cherry picked) rather arbitrarily to obtain “favorable” results. Seldom do you see them doing meaningful sensitivity studies to counter the cherry picking suspicions. That would appear to be left to others. Counter criticism of your analysis here, I think, would have to take into consideration and understand the nature of your analysis and that you are doing sensitivity testing because the data can be rather arbitrarily selected.

I have a couple of questions that come to mind about the proxies from which the 40% were selected by Mann. Were all the proxies in the original proxy selection hat considered by their authors who published them to be proxies for past temperatures and if so what does that say about Mann deselecting them? It appears, that as r is increased at relatively lower values, the % retention of “valid” proxies falls off rapidly. While I have problems personally with correlations with very low r values, one would wonder what some intermediate r values that selected say 20 and 30% of the original proxies would look like in the overall reconstructions. But, of course, I have the code and data to determine that myself – and selfishly the need to learn more R and statistical methods.

4 Queen Victoria’s space program didn’t work out. Shame indeed.

Jeff,

Just a little quibble, but it’s “retained” not “retianed”. Don’t give the trolls any excuse to dismiss you.

I’ve taken a brief look at R, and need to set aside some time to learn it. I was a physics/math major in college (decades ago), and recently got my Masters in CS. It’s time for me to polish up my mad skilz and apply some brain cells to the techniques. I remember enough math to understand the basics, and am highly skeptical of AGW. I’d like to be more than a member of the peanut gallery, though.

Keep up the good work.

One more question, Jeff. Could you give more details on the connection of the red calibration part of the reconstructions in your graphs to what you say in the comment excerpted from your introduction above? I think I understand but I am not certain.

The red line is an arbitrary signal we are trying to find. It is analogous to the instrumental temperature in Mann08.

Jeff: Maybe the Steve/Ross stuff was standard (although correct) TCO fault finding. However, you also snipped a very relevant issue analysis:

To wit, that no one is surprised at either the concept of calibration and selection or the danger of data mining. So that to show such a trivial example is not an “aha”. Instead, you need to engage at a more sophisticated level, to drive some understanding.

REPLY: I’m interested in everyone knowing how this was done. My thought is that once smart people see it they will realize how fabricated this was. I’ve provided complete turnkey code and a simple explanation. If you need a deeper understanding, run the code — I believe I said that above.

Once you figure it out, perhaps you can write the paper to a deeper understanding for me.

With that proxy source data and the instrument record, how should a temperature reconstruction be done? If you use proxies that do not correlate with temperature, I’d be upset about it. We’ve also seen him use ones that have a negative correlation with temperature in the calibration period, but he flips them upside down. Is that done here?

No flipping of data here. If you want to see the signal, you pre-choose which things are proxies and average them. The signal you get will be a combination of all factors, then you can confirm your result.

If temperature is blended in to water and everything else, you cannot sort it out with correlation. You need to find new proxies – the answer becomes:

I don’t know what the temperature was.

MikeN “If you use proxies that do not correlate with temperature, I’d be upset about it.”

I am confused. If I have samples from 100 trees and 30 of them correlate to my temperature records, does that tell me anything? What is the difference between my tree samples and a series of dice rolls? Absolutely nothing.

The first thing that I learned in my statistics class was that a random sample was required. Picking samples that corresponded to a correlation to test for a correlation is beyond laughable.

#14 “The first thing that I learned in my statistics class was that a random sample was required.”

That’s how I got banned from RC, by pointing out that you can’t choose your data according to what you want to find in your data. After that I came back to the air vent thinking why would they ban a comment like that.

My conclusion was the same as yours:

“is beyond laughable.”

It truly is beyond, IMO it’s intentional – and a bit insane that it goes through peer review by people who are alleged to be PhD’s. They must have collectively skipped climatology stats class in favor of climatology finger painting that semester.

Nice script, Jeff!

One small correction to the Word file version of it. After the line:

corv = array(0,lent) #set corv AS A VECTOR OF LENGTH EQUAL TO NUMBERR OF PROXIES

insert the line:

stat = rep(NA,lent)

The vector “stat” seems to be uninitialized.

I wonder what other things can be reconstructed with it? 😉

Jeff – I just had a WTF moment in looking at the code. You search for the exact same signal in all proxies. I don’t know Mann’s code – but does he really just use the global temperature to sort and calibrate the proxies instead of the temperature at the proxy location???

#17, Mann uses gridded temperatures. For those of you who want all the detail, Steve has a complete version of this reconstruction on CA.

#16 Thanks Roman, I found that last night and initialized it one time forgetting to insert it.

Mann08 used gridded temp. MBH98 implicitly used global temp.

I fixed the line in the code and the download document (which has much better formatting)

#18 Ah, okay. So at least one part of paleo reconstructions makes sense . . . 🙂

BTW, if I had ever done anything like the paleo stuff where I work and tried to pass it off as legitimate, I would have been fired long ago.

Jeff, by done here, I meant in Mann’s paper, though I guess it would apply here as well. Does this reconstruction by Mann use upside down temperatures? Does the correlation function validate proxies that are inversely correlated?

#14 Genghis, OK I think I see the point if these are multiple samples from the same type of proxy. However is that the case? Is this 1200 different trees, or 1200 different studies?

There are individual trees and multiple studies used. Everything he could get his hands on with an upslope. The proxies were actually used in both orientations in some cases.

See CA for Tiljander proxies, to learn about one example. This was part of my response to upside down thermometers.

“Does the correlation function validate proxies that are inversely correlated?”

– r gives a negative 1 in this case so no.

Negatively correlated proxies should be allowed if they are in the direction of expected result. Also there are probably some proxies where it’s a little indeterminate whether temp hurts or helps them.

TCO said “Negatively correlated proxies should be allowed if they are in the direction of expected result.”

If I have proxies that indicate the opposite of what I expect, I should be able to reverse them and get the results I want?

Tell me you are a comedian TCO : ) Or better yet you are trying to defeat the AGW theory by advocating it absurdly.

Perhaps I am misusing terminology, but it looks to me that #23 and #24 contradict each other. Let me ask again:

We have seen Mann use upside-down proxies. For example in the calibration period, temperature goes up when the proxy value goes down, and then when the proxy value goes down in the 1400s, that us used to represent lower temperature.

Can this happen with this methodology? The proxy and temperature are correlated, but inversely.

#25 Genghis, I think TCO is saying that if you have a proxy whose value goes down when temperature goes up, it is OK to use it, as long as your reconstruction uses smaller proxy values to mean higher temperatures.

#27 If you are asking if it worked in Michael Manns methodology, it was done manually leading to tiljander. A very few proxies were flipped- under 5 or something from memory. I was tempted to say 2 but I’m not certain.

In the example above, the proxies are not flipped.

#28, I also gave TCO the benefit of the doubt on that one.

Mike that would be a direct correlation, I have no problem with that at all. I reverse proxy would be like a metal that shrinks as it is cooled. If it is negatively correlated it would indicate heating instead of cooling.

Jeff, I remain unclear as to exactly what the arbitrary or “fake” calibrations involve. Are you saying that those series are completely synthetic with no realationship to the real world with the exception that they have to give a correlation, over the limit of the r criteria selected, with that part of the reconstruction data that overlaps the calibration period?

If I have captured essentially what you are doing then I must ask how is using “fake” calibrations a demonstration of sensitivity? Are there arbitrary and alternative selections of instrumental data that were available to Mann et al (2008) that I am not aware?

OK, so it sounds like after one has selected proxies based on the temperature record of choice, then one should examine the set of data points at time X, or a small range of time, and determine:

1. Is the set distributed in the shape of a normal distribution, a bi-modal distribution, etc.?

2. If the points represent a normal or close-to-normal distribution, then include only those that fall within 1(?) or 2(?) SD of the mean and use those for the reconstruction.

On second thought, again, finding the average and 1 SD wouldn’t work. One would have to take the selected proxies and see which of correlate with each other and select the majority that do correlate with each other over the entire time period.

Ken #31

They are arbitrary functions designed to show that we can find any function we want to in the data by elimination of data we don’t agree with. It’s not a matter of the instrumental data having alternative choice but rather a matter of alternate proxies as a choice. I did it once with instrumental global data upside right and upside down also, same result as here.

We can place a negative slope in and determine using the same criteria that temperature has dropped. The upslope is therefore an artifact of the math choosing proxies with a similar noise shape rather than actually existing in the signal itself. In unsorted areas the noise cancels out being equally positive and negative, this nearly guaranteed unprecedented ‘whatever you’re looking for’.

“Also there are probably some proxies where it’s a little indeterminate whether temp hurts or helps them.”

TCO – What does this mean? “probably” “a little” “indeterminate” “hurts” “helps” – what data and techniques are available to illuminate the validity or otherwise of the proxies you refer to? By what criteria do you define what qualifies something as a proxy?

Arghh. Again with the R code. I tried noodling around with it one day when I was home sick. As someone coming from C++/C#/Python programming I really didn’t like it as a language. Does it get better when you are familiar with it?

Also, to use proxies that are inversely related, wouldn’t you need some kind of plausible physical basis? Otherwise, it’s all Rorschack patterns. I’m hoping that’s what #25 means by the “expected” result, and not correlation to observations.

I thought there was some sort of two stage selection process in the Mann paper. It would seem too obvious that you can get the last period to look like whatever you want by picking proxies that have that shape during the period. Thus if all the earlier periods are essentially random you get a flat line up to the calibration period where it will look like the calibration data.

Retained

Unprecedented

#37 Yup. It looks like a shock and recovery to zero in every Mannian reconstruction. This is the most simple version. How can a guy who run’s RegEM not understand what’s happening?

#36, A bit better. You can’t single step and see values. Matlab is much better but both are slow. The big advantage in R is the ability to handle linear algebra and plot so effortlessly. Can you imagine the work required to C++ an antarctic plot. Sure you can do it, but resolving lat/lon and land coord with different types of projections. R makes that much nicer.

Matt Y. said

June 20, 2009 at 4:38 pm

Yes. Unfortunately, for many proxies the physical explanation is often inconsistent within the population itself, e.g., tree rings. Non-lineariites probably drive this (which also implies that linear signal extraction methods are woefully inadequate for the task).

Mark

If a class of proxy had a “natural” orientation, then it doesn’t matter whether the correlation is negative or positive – as long as you use it consistently. I think that some people are arguing against a trivialization of this point that no one is advocating.

For example, maybe speleos agree that speleothem dO18 or something like that is negatively correlated to temperature. Then you can’t ex post flip them around depending on 20th century correlations to HadCRU gridcells. Tiljander published her series with one orientation, saying that the 20th century portion was contaminated by building local bridges or something like that. But the correlation of the contaminated data with temperature caused the data to be flipped over in Mann’s algorithm.

Ex post screening accomplishes something similar to ex post flipping, though you need more series to accomplish the same result. Mann uses very large networks. Unfortunately, his methodology does not result in an averaging out of noise but simply a larger selection of data to be mined.

Getting serious for a second now, I discussed this issue (trend matching of a single century) with Zorita (who is the real deal, boys and girls). He made the common sense point that what you need is a validation that does some wiggle matching. For instance coral looks extremely responsive. Trees are all over the place. Either you need WAY more tree data to overcome signal to noise…or better proxies that are more coral like.

Note, though, that it’s interesting to hear Jeff dis trend matching here wrt these proxies and then want it in Antarctica. Taps foot…

REPLY: I would be interested to hear the Q and A on this one.

Jeff, please, assume that we are biding time here until we can get those who have been through the data and methods to make their comments. I complain about TCO not doing his homework and the same goes for me – and I need to know when I am wasting bandwidth.

I agree and appreciate that adding an instrumental record onto an otherwise white noise proxy reconstruction proves almost nothing about the validity of a reconstruction and particularly when the calibration part of the reconstruction can be arbitrary. We can know we have an instrumental upturn in temperature trends but adding that onto the end of a reconstruction that does not correlate temperature with the proxy measures would produce a hockey stick (like the Penguins used to beat the Red Wings). I may be confused from the use of CPS and thinking in terms of regression models.

You use synthetic calibration data with an “up” or “down” final trend and the reconstruction part remains flat. You change the r criteria when you go from the “up” calibration (r greater than 0.1) to the “down” calibration (r greater than 0.3) and you lose a good portion of the proxies that no longer qualify. Why change the r criteria and not leave it at r greater than 0.1 or 0.3? How much of the change in percentage of the qualifying proxies due to the change in r criteria or the change from using “up” synthetic calibration data to using “down” synthetic calibration data?

The last 2 graphs look the same in the calibration period but different in the reconstruction period and both use r greater than 0.3 for a selection criteria? It also appears that the sine calibration data changes the shape of the reconstruction part of the curve.

#41 – I suppose one must have some reason to believe tree rings are actually a proxy for temperature. There are so many variables that affect tree growth, it could be that tree rings are not reliable proxies for temperature. Without a “standard candle,” i.e. something we know for sure is a proxy for temperature to which to compare a candidate proxy, how can we be sure that a given proxy is representative of temperature?

Jeff, now I’m confused gain. Steve says the algorithm is flipping proxies upside-down, and you say Mann did it manually.

Let’s say I have a proxy that looks like a negative hockey stick. Then I would want the algorithm to flip this proxy upside down when I average it in, correct?

Now I have one that goes 3s all the way thru 1700, goes up to 4.5 by 1800, then decreases steadily to 4. Again I would want the algorithm to flip this. My understanding is that Mann’s algorithm didn’t flip it, but instead averaged it in as is, and it’s ok because the last part correlates with temperature.

Mike N, IMO it’s not helpful to construct toy examples. Think in terms of populations. IF you use white spruce ring widths or whatever as a proxy for temperature,. then you should know the orientation from prior analyses. Then you are obliged to not only to use that orientation but all your samples. You can’t pick and choose after the fact as Mann does.

Nor can you opportunistically flip orientations. To show just how opportunistic Mann’s algorithm was – he did “early calibration” and “late calibration” experiments. We discovered that his algorithm used one series in one orientation in early calibraiton and the opposite orientation in late calibration.

Surely this is a reductio ad absurdum of the method.

Jeff:

A. Please release post 40.

B. The Zorita conversation was on CA a few years ago. Was in the comments and I’m not sure which thread.

C. All y’all should be careful about what Steve claims he’s proved about flipping. For one thing all the proof and investigation has been on his blog, not in accessible well-written technical papers. I don’t think he’s done any kind of thoughtful lit search on the issue and a real investigation of when a bit more mining is warranted and when physical effects should be a pre-requisite (and given this is a simple issue, would warrent there is some literature and some subtleties here…) [snip]

VOG: [snip]!

“The real deal”

Hehe…real what exactly?…

42 (RC-like Voice of God reply):

Here is a link to the Zorita discussion. See especially posts 23 on.

http://www.climateaudit.org/?p=673

Jeff – I installed R, but it appears to be a command-line version. Is there a graphical user interface version? I use Ubuntu.

#41 At least according to De’ath, coral ain’t so good as a proxy.

This is sort of on topic.

Here’s a cool graph showing precipitation as measured by bristlecone pine tree rings in the White Mountains of California (red) and instrumentation for the same area (blue) from 1930 to about 1995:

I’ve always thought that if the Hockey team was measuring anything accurately, it was much more likely that they were measuring precipitation rather than temperature. This graph seems to suggest the possibility of just that.

What particularly caught my eye, is the significant drop in precipitation beginning around 1983 and continuing on to the end of the graph, combined with a corresponding decline in tree ring widths for the same time period. It’s interesting to me that this time period roughly coincides with the much talked about divergence of tree ring and temp data that also occurs during this time period.

I may be way off in my thinking, but it’s worth a look to compare this graph with the original MBH98 graph (I believe tree ring data stopped around 1980 in the MBH graph) and the subsequent graphs showing the divergence more comopletely.

RE: #49

comopletely?????????????????????? completely

Almost made it through an entire post without a typo.

53. The “much talked about divergence” is NOT with respect to bcps. It’s with respect to northern forests.

Thanks for this post. I never understood before exactly why the hockey stick was BS. It’s actually amazingly simple! Averaged noisy data gives a flat line! DUH! In light of this I can’t believe that it’s still defended over at RC and other places on the internet. But I guess the average AGWer for ya.

My big problem here is summed up by the old saw “correlation is not causation”:

While I like the idea of at least correlating to regional climate, it’s not bloody obvious that the real link between tree ring size and climate is not only temperature, but also other variables such as annual rainfall, fertilization effects, average sunlight and so forth.

What makes this even more complex is that in general the effects of these variables on tree-ring growth rate are not independent. Hot and dry is as bad as cold and wet for tree ring growth for most trees.

What this means in practice is that tree ring growth throughout the year is very nonuniform and usually concentrated around the periods in time for which there is simultaneously the appropriate warmth, amount of sunlight and moisture. Thus, for tree-ring proxies in cold climates, likely you are principally sampling only the summer months.

How does this help with annual temperature? The answer is it really doesn’t, because there are atmospheric and sea surface oscillations that can lead to more violent extremes in temperature with little net shift in annual average temperature. Even worse it is entirely possible to have the proxies correlate with temperature over one climate period, and anti-correlate over another, just due to differences in corresponding rainfall patterns etc.

In an ideal world you construct informed parametric models for your proxies such as tree-ring growth, you calibrate them for when you have good data, and then you perform (to the extent it is possible) simultaneous reconstructions of all the independent variables that your proxies depend on.

On the positive side, at least they’ve moved away from “teleconnections” now….

RE: #53 TCO, “The “much talked about divergence” is NOT with respect to bcps. It’s with respect to northern forests.”

I think you missed the point of the post. Did you look at the graph I linked? The red line in the graph is precipitation amount reconstructed from the bristlecone tree ring widths. Does the red line in that graph look anything to you like the nearly straight line GMT temp increases from 1978 to the late 90’s?

A width is a width is a width.

#45 Steve, I’m trying to understand the algorithm. That’s why I used the toy example.

Is it the algorithm itself that is flipping the proxy, or did Mann do this manually?

If it is the algorithm, then presumably that would happen with Jeff’s examples too.

Thepage48: I’m aware of what you posted, but the “much-TALKED ABOUT divergence issue” is northern forests, NOT bcps. I have had to correct this mistake with another bull-headed skeptic before. We went through a couple weeks of looking at review papers, before he agreed.

#57 MikeN The algorithm Mann used had a flag you could set to allow negative correlation. If the flag was set and the correlation was negative the proxy was flipped by the algorithm, however the flag which allows the flip was set manually. It is essentially a manual flip. This happened in a very very few of 1209 original proxies.

It doesn’t happen in the example above, in the example above we loose one or two more proxies because negative correlation isn’t accepted.

I like that you’re considering the detail Mike, if you really want to understand what’s happening deeply I would like to encourage you (and others) to download R and copy the script into it. The whole thing is a lot easier than you might expect and it’s interesting to see line by line what’s happening. Roman just copied the code and ran it, found a problem and corrected it. He likely doesn’t have a single question about how it’s run.

=====================

#49, Jim

R has some screenshots of it’s operation on the homepage. I was surprised by its starkness, it basically is a command line window inside a very limited GUI menu is that what you are seeing?

#58. There are multiple problems with the Mann algorithm and you need to browse older threads here and at CA to get the flavor.

One tree ring incident that was annoying. A subset of tree ring proxies were collected by Peter Brown who’s visited CA to admonish me (unjustly IMO) for supposedly misunderstanding tree ring proxies. Some of his chronologies were used in Mann 2008. They were trees in the US Great Plains with a positive correlation to precipitation and, if anything, were negatively correlated to temperature.

For tree rings (but not speleothems), Mann required that the chronologies have a positive correlation to temperature. He did not take all the chronologies, but mined them. In the Brown subset,they mostly had a negative correlation to temperature. Mann mined the data set retaining only one chronology out of 16 – the one that by happenstance went up.

We challenged Peter Brown and/or Rob Wilson to submit a comment, but neither of them are willing to touch anything that faintly criticizes Mann with a bargepole.

Speleothems were handled differently. They were allowed to have either orientation and as noted above, Mann permitted the same series to be oriented both up and down depending on whether it was “early” or “late” calibration.

In response to the other question, Mann let the algorithm work.

But there is plenty of evidence of manual examination of results in this sense. There are many ad hoc rules and decisions in the extended algorithm that do not derive from any known statistics. From my experience with this corpus, there is often a “reason” for the ad hoc decision though the issues are seldom discussed and the method seemingly appears from outer space. I have never encountered a case in this corpus where the seemingly arbitrary decision has resulted in a lesser hockey stick.

To quote Rabett:

“There’s a hockeystick in every popsicle”

#58 Jeff –

I have to run it from terminal and I get a command line in terminal. I don’t see any gui. I found a front-end for R under development called rkward. I’m playing around with it.

OK, rkward is a bit awkward and crashes a lot. But I downloaded the Word document, cut and pasted into the R command line in terminal. The downloads worked (pretty cool feature!) and some of the code worked. Do you think control characters are getting copied from the Word doc?

> leng=length(m) ## RECALCULATES leng SO THAT PEOPLE WHO MESS AROUND WITH THE FUNCTIONS ABOVE DON’T HAVE TROUBLE

> m=ts(m,endyr-leng) ## CONVERT FAKE CALIBRATION TEMPERATURE DATA TO TIME SERIES STARTING AT endyr MINUS LENT

>

> corv = arr

Error: object “arr” not found

+ sd0=sd(val,na.

+

+ yscale=y-m0#CENTER TEMP PROXI

Error: unexpected symbol in:

”

yscale”

> yscale=yscale/sd0#SCALE INDIVIDUAL PROXY BY DIVIDING SD OF PROXY IN FK CAL TEMP RANGE – more wiggles st

Error: object “yscale” not found

>

> x=ts.union(x,ts (yscale,time(y)[1])) #ADDS EACH INDIVIDUAL PROXY INTO ONE BIG MATRIX – EACH TIME THROUGH ONE

Error in inherits(x, “data.frame”) : object “yscale” not found

> }

Error: unexpected ‘}’ in “}”

> }

Error: unexpected ‘}’ in “}”

>

> adrecon = window(x,start=0,end=1995) #A COUPLE OF PROXIES EXTEND FAR IN THE PAST SO USE YEARS 0 to 1995 TO MATCH MANN 08

Error in attr(x, “tsp”) Z=rowMeans(adrecon,na.rm=TRUE) #AVERAGE ALL REMAINING SCALED SERIES YEARS TOGETHER PROXIES ARE IN COLUMNS BY Y

Error in inherits(x, “data.frame”) : object “adrecon” not found

> Z=ts(Z,start=0) #MAKE Z INTO A TIME SERIES STARTING AT TIME 0AD

Error in ts(Z, start = 0) :

‘ts’ object must have one or more observations

>

That’s tough jim. The value

corv = arr

is truncated from the line which says.

corv = array(0,lent)

I don’t know how to fix it. I’m not familiar with Ubunto or R in Linux. There has to be a gui compatible version which can accept a script.

Jeff,

Is there some reason you didn’t just call it a .r file? Then it could be downloaded and saved in the file system, and opened from the menu in R.

Alternatively Jim, just download the file, don’t open it in word. Right click on the file in the post and save it instead of opening it. Then rename the file extension from .doc to .r and open it from the menu in R.

Jeff,

I would still like to understand how Mann08 early and late calibration relate to your calibration over the entire instrumental period.

#64 WordPress doesn’t let me call it .R and link to it.

#65 Each calibration early and late have the same characteristics. I only showed it once to demonstrate how the blade is made. If you imagine that the average of the noise is nearly a horizontal flat line in the early calibration, you can try the algorithm above with a horizontal flat line to see the effect.

Jeff,

Weird artifact of WordPress, I didn’t have the same issue in blogspot. Then I suggest you put in the instructions that I suggested to download the file and rename it.

Just a test to see if I can link to it here.

I’m sorry your comment about early and late calibration is too obscure for me. I’m not actually familiar with the details of the technique. Did a proxy have to pass two separate calibration screens to be included?

OK, got it to run in Ubuntu. Copied and pasted the text from Word doc to gedit (a text editor in Ubuntu). Set gedit to (using menus) View | Highlight Mode | Scientific | R. Deleted all comments and then moved cursor to the end of each line to be sure there were no apparent spaces at the end of line. If there was a space deleted until cursor rested at end of line. Invoked R in terminal. Copied and pasted text from gedit to terminal. It took a few tries at cleaning to get it right. Also, there appears to be a “;” missing from the Z = null line. It runs with the “;” there at least.

I see my link worked. Perhaps what you mean is that wordpress isn’t willing to let you upload a .r file. I solved that type of problem by creating a free site at weebly that I just store files in and uploading my pdf and .r files there. I just link to them from blogspot.

#67 Not exactly. Mann allows the use of a few of the proxies in one orientation during late and another orientation during early.

In windows, use Notepad rather than Word for saving scripts.

The problem that Jeff has is the same one that I have on my WordPress.com site. When uploading media files to the blog, the only allowed file types are:

jpg, jpeg, png, gif, pdf, doc, ppt, odt, pptx, docx

Even txt files are not allowed so Notepad is not particilarly useful. Thus, our only options for posting R documents are Word, Office or pdfs. Finding alternate free hosting sites means losing some control on the files.

Can’t some settings be thrown into word to take out the formatting issues?

If you are running Ubuntu then you have access to open office. It its not already installed you can use synaptic to get it. If you open the word doc in open office then you can save it as a text file. from the R command line prompt you type source(“filename.txt”) and hit return. And yes if you have spent your life writing C programs then R is hard to stomach. Its pretty ugly.

#75 I agree it’s not the prettiest. It’s very crude compared to matlab also. I did allmost all of my previous hockey stick work in C which worked fine and was fast for me to write despite the hundreds and hundreds of code lines. It was also at least 100 times faster for running time, it’s just as easy to create 1,000,000 artificial proxies as it is to create 10000. R is also memory limited.

C however prevented the sharing of the more complex calculations that R can do in a single line. So by redoing this with R, the calculations are more clear and nobody has to question whether I did my matrix multiplication correctly.

Gawd, I wish it had break points though.

#76

I have an interpreted language that I use for my finance stuff. It is set up R like but the syntax is much less clumsy. Things like matrix multiplication in all flavors are just callable routines and since the interpreter can support arbitrary base data types everything looks nice.

so i can say something like.

A = matread(“filename.a”, numrows, numcols);

B = matread(“filename.b”,numrows,numcols);

C = matmul(A,B);

When you want to add a new function in the language you fill out some table which defines its name, the types of input parameters and what it returns. So the line that defines matmul is something like:

matmul T_MATRIX ts_matrix_multiply T_MATRIX T_MATRIX

this says to define a function whose name is matmul that is linked to the C routine ts_matrix_multiply and takes two arguments which are matrixes. If you try to pass matmul a matrix and a series the interpreter complains. You can also overload function names so the same name will operate on differing inputs. So if you had another definition of matmul which took three args it could be defined in the table as

matmul T_MATRIX ts_matrix_multiplythree T_MATRIX T_MATRIX T_MATRIX

then you could say in the language

C = matmul(A,B)

D = matmul(A,B,C)

To get the lattitude averaged temps that I posted upthread the following code ran.

sattemp = matread(“sattemp.dat”, 300, 5509);

satlon = matread(“satlon.dat”, 1, 5509);

satlat = matread(“satlat.dat”, 1, 5509);

highlat= -90.0;

delta = -3;

totalcells = 0;

for (i = 0; i = highlat) and (latgood < highlat – delta);

numgood = rowsum(latgood);

lattemp = sattemp * latgood;

avtemp = rowsum(lattemp) / numgood;

rsl = slope(avtemp, 300);

slp = rsl[299];

avg = ssum(avtemp) / 300;

printf("highlat = %3.0f lowlat = %3.0f numcells = %3.0f avg = %6.3f slope = %6.5f\n", highlat, highlat – delta, numgood[0], avg, slp);

highlat = highlat – delta;

totalcells = totalcells + numgood[0];

}

printf("Total Cells = %f\n",totalcells);

If you are interested I could strip out all but the base stuff and tar it up for you. The nice part is that you can run it under gdb if necessary though usually its not. I thought that the R plotting stuff just uses G plot??? If you get good with the language you can start doing finance sims. If you can make good predictions it pays better than climate 🙂

wordpress eats the less than signs so here it is with fortran like encoding 🙂

highlat= -90.0;

delta = -3;

totalcells = 0.0;

for (i = 0; i .lt. 9; i++;)

{

latgood = sattemp – sattemp;

latgood[0,0,0,5508] = satlat[0, 0, 0, 5508];

nozero(latgood);

latgood = (latgood .gte. highlat) and (latgood .lt. highlat – delta);

numgood = rowsum(latgood);

lattemp = sattemp * latgood;

avtemp = rowsum(lattemp) / numgood;

rsl = slope(avtemp, 300);

slp = rsl[299];

avg = ssum(avtemp) / 300;

printf(“highlat = %3.0f lowlat = %3.0f numcells = %3.0f avg = %6.3f slope = %6.5f\n”, highlat, highlat – delta, numgood[0], avg, slp);

highlat = highlat – delta;

totalcells = totalcells + numgood[0];

}

printf(“Total Cells = %f\n”,totalcells);

There are other advantages to R. It has an enormous library of plotting and statistical functions including filtering, fft and piles of unique stuff. It does include gplots too. I’m reluctant to switch to another free software although it is tempting to work only in Matlab.

I’m running only windows now. I messed around with Linux for a couple of years and gave up on it because it didn’t interface easily with engineering boards and cameras. I kept having driver troubles but it was rather unique hardware.

I think that most of the stat functions in R are available in the gnu scientific library. I use it for doing svd’s. I would not dream of trying to rewrite the numerical functions. It’s an artform in its own right that is not at all my area of expertise.

My condolences on Windows. I did battle with microsoft crap for about 10 years and haven’t looked at it in 20. Never again.

It is a definite problem that board manufacturers first build drivers for windows then if they feel like it do something for linux. I had the same experience with cameras. I built a device that was used for helping people watch replays of their golf swing. It had three different cameras and would start and stop and generate replays based upon when the ball was teed up and when it left. It was a lot of fun but yes I had to make it work under DOS cause they had no drivers for linux and wouldn’t give me the hardware specs so i could write one. They were paranoid that I was going to go into competition with them. Maybe that’s why the team won’t release code and data. They are afraid they will be replaced.

#80, The posts are appreciated, however I would like this thread to refocus on the issues related to CPS because these posts are going to replace the old ones on hockey stick temperature distortion and because they are a popular concept and at the top of the blog, many thousands of people will read it over time.

Jeff ID, I have read your thread introduction again to better understand what the CPS method can do to translate the influences of the calibration period to the remainder of the reconstruction. (Your explantion is very straight forward and the problem I had was part of a more general one I have had with other parts of your presentation where I anticipate what what I would have done). Helping these old eyes see the differences in your 4 graphical presentations finally required me to print each of them on a separate sheet of paper. I now see the calibration sine curve differences. An aside on that is that the sine curve imposing an upward trend in temperature had the smallest percentage of proxies meeting the 0.3 r selection criteria.

I was unprepared for the use of a synthetic (fake) calibration curve in a sensitivity test and I think that is why it has taken me longer to see a valid reason for doing it as you have. I am curious whether my explanation below fits your reasoning.

It would appear that by providing synthetic calibration data one can demonstrate that it can dramatically affect the part of the reconstruction that is otherwise unknown, i.e. that part coming before the instrumental period. Using various forms of synthetic data also shows how the r selection process remains capable of selecting fewer but still significant numbers of proxies. In the end, however, what I see by your synthetic data approach is a damning demonstration of the fallacy of using the CPS method as was apparently done in Mann et al. (2008). Your method shows that future temperature anomaly data and its shape (as implied by your synthetic data) in the CPS method application used by Mann can affect the shape of the past anomaly data as it would be represented in the future reconstruction. I do not see any constraints in the methods that would prevent this unphysical occurrence, but I must admit that I have not yet thought this seeming contradiction sufficiently through to a good understanding.

I would still like to see the sensitivity of the reconstruction curve to more levels of the r value selection criteria using both the instrumental record and various synthetic data sets. Maybe you have presented those results in past posts. I can do them with your R code.

#81

I agree. Please snip as required.

#82

I think that it is easier to think of it in more simpler terms.

You have a large basket of random noise time series say 1000 points long.

You look thru the basket and pull out those than have some positive correlation to an upslope in the last 100 time periods. You now take all the selected series and add them together. You will get basically a flat line (average of the random noise = zero) up until time t[900] when the average will start sloping up. If you have a large enough sample of random time series there is NO function that you will not be able to pull out. Complete with a flat tail in the preceding period. So you can produce any unprecedented reconstruction you like all from nothing but uncorrelated random noise.

#82,

You’re close, I believe the demonstration is horribly damming but I really didn’t look much at the historic data, just the ability to create any fit you want in the calibration range. David #83 has it the way I read it. There are some ridiculous proxies in the set which cause the strangeness in history.

Tonight I will have a post which finishes the demonstration (and CPS 😉 ), it uses synthetic ARMA data with a known signal and will demonstrate how well CPS does at extracting the signal. This method allows us to see how even a flat 1 degree signal is distorted in the timeframe of the reconstruction.

As far as different r values, everyone has different thoughts on what they want to see to understand fully. The code is completely turnkey now and I commented the line for correlation in the code so it’s meant to be messed around with. Everything runs pretty fast so I would encourage you to take a shot at it.

This part I understand about any reconstruction and specifically about Mann’s original and its progenitors. It merely needs one to assume that the reconstructed part of the proxy is white to red noise and we could not determine whether the reconstruction was picking out a signal or not. Would that require what Jeff has shown here or simply the assumption of white/red noise giving a result the same as a legitimate proxy/reconstruction would and not being able to distinguish between the two?

What I see in Jeff’s demonstration is that if we give the proxy (or select a proxy with) a large variation in the calibration period the CPS method will “normalize” that variation and translate it to the reconstructed part of the proxy.

85:

Its that the method as shown will be able to pull out from a random set of time series a subset which will match any calibration period and shape as long as you have enough random series to choose from at the start. So yes, you would be unable to distinguish whether or not the original set of proxies were legitimate (as in there is some other physical confirmation of their ‘proxyness’) or just made up coin flips. So as a science project it is useless.

The normalization part is just so you can add dissimilar proxies. Remember, some of the proxies are measured in inches per year, micrograms / decaliter, etc etc. You have to get them all in the same units so the easy way to do this is to divide each series by its own variance in its own units just leaving ‘standard scores’ which are dimensionless so you can add them happily. There are second order complications if the distributions of the measurements in individual proxies are not gaussian but that is so far down in the error term that it is hardly worth talking about.

David_A: from Jeff’s introduction to the threadwe have

I understand the normalization part to convert units. It is the normalization part above to which I was addressing my observation. Look at the large variation in the red calibration synthetic sine data and that seen in the reconstructions that go with those calibrations.

Jeff, I’m gawna deh it, gawna deh it.

This may not be a spot-on topic post, but if you believe the MWP and LIA were global events, it seems the thing to do would be select for proxies that show those events. I think you have amply demonstrated the technique in question will spit out fallacious data. That being said, since I have been reading up on global warming, I never cease to be amazed.

“I think you have amply demonstrated the technique in question will spit out fallacious data.”

I have shown that you will split out any data you want. You could correlate these proxies to the fire-retardence of wrappers on coke cans using CPS.

Re; #89, “I have shown that you will split (sic) out any data you want. You could correlate these proxies to the fire-retardence sicof wrappers on coke cans using CPS.”.

Great line.

I’m happy to report that on the personal front I’ve learned enough about the statistics to at least follow the discussion.

I have a whole slew of questions but not the time to put fingers to keyboard at the moment. Hope I can post my Q’s in part 2.

I always enjoy your posts, Jeff. Keep it up. P

Jeff ID, I have run your code for the synthetic up slope at several levels of r and the sine synthetic for r =0.6 – both with no infilling. What is striking about it for me is that regardless of the numbers of proxies making up the reconstruction (higher r yielding fewer proxies) is that the variations (wiggles)in the reconstructed part stay very close to the same.

The results above are apparent when considering the CPS methods, but as I stated previously it puts an unphysical twist on the past data as it will be affected by the varaition of future temperature variations. I would think in the real world, changing the number of proxies in the reconstruction to the degree that the r selection allows should visually change the wiggles in the reconstructed part of the curve much more than I see.

Jeff, the code ran as advertised. Great job. (I was able to screw it up with my modifications, but readily correct it with all the commented material that you provided.) I did have some run time problems with lower r values that could have been from a Mc Afee interference.

How do I post graphs here or provide links in a post to graphs at Image Shack?

Kenneth,

I don’t have a clue. Someone put images up here about a month or two ago. I don’t know how they did it.

I’m interested in your results (I have probably already done some similar plots), if you put it in imageshack and add links people can see them. I think you get a much better feel for what’s happening when you mess around with the code.

Jeff, I will do the Image Shack link. I just did the r = 0.8 with the postive synthetic calibration data (1% of the proxies retained) and I finally got the reconstructed part of the graph to show increased variation. A rather large (relative to the calibration period) up trend and then down at the left end of the graph immediately after 0 AD.

I made a lower r value run with your code and it locked up my computer again. I need to investigate why, but I would guess it is an error on my part.

Re: #91

Kenneth – you can embed a pic using HTML code if you have the pic hosted somewhere (and wordpress accepts it).

Example:

I haven’t used this stuff in a while. Let’s see if I did it right!

#93, I’m not sure what the problem is with lower values, I’ll try it myself tonight.

When you get to r=0.8 you start looking at a very few proxies so historic variation isn’t dampened by the other random noise.

RE: #94

oooppps. It didn’t take the code or even print it out in the post. Sorry. Thought I could help!

I don’t know why it didn’t work. Word press takes othe HTML.

Oh, well – sorry

RE: #91

Kenneth or Jeff:

How to Embed Images in Comments on WordPress:

http://www.trevorfitzgerald.com/2007/12/wordpress-comment-images/

#97, It won’t work on free wordpress. – We’re high budget here.

I’d like to reserve this thread for CPS and discussion related to that because this thread will be around for a long time.

Jeff, I found that when using infill I can go below r =0.3 without stalling my computer, but with infilling I do not get a time series to plot using r=0.8. I think the higher value r with infill produces no proxies to plot.

>I’d like to reserve this thread for CPS and discussion related to that because this thread will be around for a long time.

Rats. Well I’m leaving this comment anyway. Have you guys reviewed Mannian smoothing, with minimum roughness and minimum slope? It is part of the algorithm in a Rahmstorf paper that is included in the Copenhagen report.

With all these methods, I’m thinking he should perhaps be added to the periodic table of mathematicians, replacing Mersenne.

http://stetson.edu/~efriedma/periodictable/

Jeff ID, I have listed the links to my graphs using your code with various r values below. I retained your “Retianed” signature for proof of authenticity.

I need to do more of my own analyses to get a better feel for the determining the influence that the calibration part of the CPS has on the reconstruction. It’s all in the mathematical manipulation of CPS “normalizing” the means and the standard deviations and if I were a decent mathematician I could readily conceptualize that part.

My computer hangs on the last “for” statement before the plotting step when I attempt to use an r of less than 0.2. I need to figure out why that happens.

#101, Your problem triggered a lightbulb.

This statement

x=ts.union(x,ts (yscale,time(y)[1]))

starts to take a long time when more proxies are used. That’s probably the problem, you can wait it out but it will take a while.

JeffId, Thanks for script. A few basic questions. The data is loaded in temp files correct? Such that as I try it over and over, I don’t create a large disk use for the same data. This script gives 1 graph correct? I get 50 warnings at

+x=ts.union(x,ts (yscale,time(y)[1])) #ADDS EACH INDIVIDUAL PROXY INTO ONE BIG MATRIX – EACH TIME THROUGH ONE MORE IS ADDED

+ }

+ }

There were 50 or more warnings (use warnings() to see the first 50)

Is this expected?

#103 John,

The data is loaded into memory only. If you try it over and over you shouldn’t need to run the top of the script and it won’t use any disk space outside of the R environment.

I don’t recall any warnings except in step two of the process. Can you type warnings and tell me what you see?

Warning messages:

1: In window.default(x, …) : ‘end’ value not changed

2: In window.default(x, …) : ‘end’ value not changed

They are all the same for the first 50.

#105 John,

Those are from the window time statement, its caused by having a time series that ends in 1982 or something and calling window(x,end=1985) It shouldn’t cause any problem.

I just want to make a few brief comments on this post. Essentially I find the claim that using CPS style methods, any shape can be found from actual proxies believed to contain a temperature signal unconvincing. This is partly because you have not in fact followed the Mann et al. 08 procedure. Specifically:

1) Mann et al. 08 requires that proxies satisfy the condition that P < 0.1, ie, that the probability of the proxy arising by chance in the calibration period from white noise be less than 10%. They comment that this is effectively equivalent to p < 0.13 for annually resolved proxies because they have the profile of red, rather than white noise. They further comment that the high number of proxies that passed the screening (around 40%) when only 13% or less would be expected to pass by chance shows the selected proxies to contain a significant signal. This represents a significant test of validity, that the ratio proxies passing the screening be significantly greater than expected by chance, that you have not retained. Of your graphs, only the "significant warming" graph passes this test, with the "unprecedented Sine — waving" graph being ambiguous. The other two graphs definitely fail with insufficient proxies passing the test to accept significance.

2) More importantly, proxies satisfy the P test if they are sufficiently correlated, or anti-correlated with the local temperature record from their location. In effect, Mann et al. screen for either sufficient correlation or anti-correlation, while you only screen for correlation. Applied to red noise, a screen for correlation or anti-correlation will typically produce equal numbers of both types, and hence the mean of screened "proxies" will not vary significantly from the mean of all "proxies", ie, there is no selection for shape.

3) You have still failed to replicate the cross validation mentioned by Mann in the quotes above.

4) Further, Mann et al. distinguish carefully between annually and decadally resolved proxies, and screen against local temperature grid points rather than a single global curve. The later means the proxies are not screened for a single curve, but rather against a variety of different curves which may, or may not share the shape of the global temperature average curve.

Even with these failings, your attempt to screen for particular curves is only partially successful at best. The "Unprecedented sine — waving" completely lacks the first half wave. The inverted sine wave has waves of only half amplitude, with the final half "wave" not descending but rather continuing to rise. It is reasonable to believe that had you employed the full CPS technique, your matches would have been even worse.

I look forward to your follow on blog when you correct for these errors to see how many shapes you can pick out once you do.

Tom,

I’ll try and address some of your concerns. It’s quite a list though and you’ve missed the point.

#1 – You are correct in stating man required p<.1 and they did make some correction for autocorrelation which in reality turned out to be insufficient. I believe Hu McCulloch wrote on this at CA. You are also correct that they passed 40% where you go wrong accidentally is saying it's a significant test of valididity.

This is actually completely separate from the issue discussed here. The purpose of this post was quite simple, show that any signal you want can be extracted from the data and do it directly with Mann's proxies.

He's made several arm waiving criticisms of red noise variants on this method so I did it with his own data and provided the code.

Please don't forget that the 40% came after RegEMing UPSLOPE data on the end of over 90% of the proxies and picking two gridcells rather than one, underestimating the autocorrelation all for selection.

Here's one of my favorites though:

https://noconsensus.wordpress.com/2008/10/11/will-the-real-hockey-stick-please-stand-up/

One graph shows 39% picked r=.1 from a downslope.

#2 We may actually agree on this point. I'm not clear though on the way it's written. Certainly there is selection for shape in the calibration period and this is significant. Also, the shape of the historic signal is significantly distorted from the zero line until it gets far enough away from the calibration period for the red noise to return to within significance of the mean. All of this assumes we sorted for an upslope.

#3 This statement is concerning for any future discussion which I would enjoy. The reason is that this post points out the statistical tests and then explicitly states that there is no attempt to address the cross validation issues at this point. Your comment reads like a troll style attack rather than a reasoned shortcoming that I've missed.

#4 I don't see how using multiple curves or a single curve could rectify the shortcomings of CPS. Again, that is a different issue not addressed here.

The last point which begins with 'even with these failings' – it doesn't matter what curve I use. If there is a set of parameters which somehow proves some effect, you can put them into the R code and show it pretty easily. The section where the curves are made is pretty clearly commented. The half amplitude effects are eventually rectified in CPS by an additional regression of the result on the sine wave. This was unnecessary for this post as my intent was to show people the simplest possible method to get any signal you want from Mann08 data.