I was going to hold off on this post because Dr. Weinstein’s post is getting a lot of attention right now it has been picked up on several blogs and even translated into different languages but this is too good not to post.

Ryan has done something amazing here, no joking. He’s recalibrated the satellite data used in Steig’s Antarctic paper correcting offsets and trends, determined a reasonable number of PC’s for the reconstruction and actually calculated a reasonable trend for the Antarctic with proper cooling and warming distributions – He basically fixed Steig et al. by addressing the very concern I had that AVHRR vs surface station temperature(SST) trends and AVHRR station vs SST correlation were not well related in the Steig paper.

Not only that he demonstrated with a substantial blow the ‘robustness’ of the Steig/Mann method at the same time.

If you’ve followed this discussion whatsoever you’ve got to read this post.

RegEM for this post was originally transported to R by Steve McIntyre, certain versions used are truncated PC by Steve M as well as modified code by Ryan.

=======================

Ryan O – Guest post.

I’m certain that all of the discussion about the Steig paper will eventually become stale unless we begin drawing some concrete conclusions. Does the Steig reconstruction accurately (or even semi-accurately) reflect the 50-year temperature history of Antarctica?

Probably not – and this time, I would like to present proof.

I: SATELLITE CALIBRATION

As some of you may recall, one of the things I had been working on for awhile was attempting to properly calibrate the AVHRR data to the ground data. In doing so, I noted some major problems with NOAA-11 and NOAA-14. I also noted a minor linear decay of NOAA-7, while NOAA-9 just had a simple offset.

But before I was willing to say that there were actually real problems with how Comiso strung the satellites together, I wanted to verify that there was published literature that confirmed the issues I had noted. Some references:

(NOAA-11)

Click to access i1520-0469-59-3-262.pdf

(Drift)

(Ground/Satellite Temperature Comparisons)

Click to access p26_cihlar_rse60.pdf

The references generally confirmed what I had noted by comparing the satellite data to the ground station data: NOAA-7 had a temperature decrease with time, NOAA-9 was fairly linear, and NOAA-11 had a major unexplained offset in 1993.

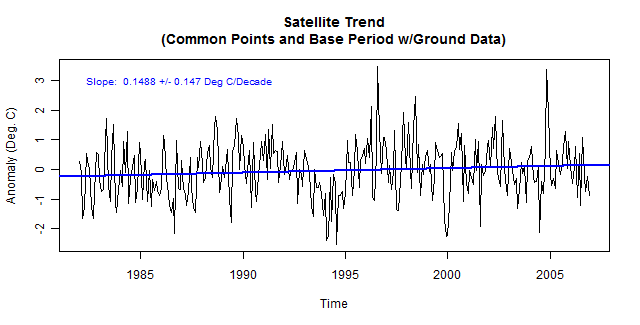

Let us see what this means in terms of differences in trends.

The satellite trend (using only common points between the AVHRR data and the ground data) is double that of the ground trend. While zero is still within the 95% confidence intervals, remember that there are 6 different satellites. So even though the confidence intervals overlap zero, the individual offsets may not.

In order to check the individual offsets, I performed running Wilcoxon and t-tests on the difference between the satellites and ground data using a +/-12 month range. Each point is normalized to the 95% confidence interval. If any point exceeds +/- 1.0, then there is a statistically significant difference between the two data sets.

Note that there are two distinct peaks well beyond the confidence intervals and that both lines spend much greater than 5% of the time outside the limits. There is, without a doubt, a statistically significant difference between the satellite data and the ground data.

As a sidebar, the Wilcoxon test is a non-parametric test. It does not require correction for autocorrelation of the residuals when calculating confidence intervals. The fact that it differs from the t-test results indicates that the residuals are not normally distributed and/or the residuals are not free from correlation. This is why it is important to correct for autocorrelation when using tests that rely on assumptions of normality and uncorrelated residuals. Alternatively, you could simply use non-parametric tests, and though they often have less statistical power, I’ve found the Wilcoxon test to be pretty good for most temperature analyses.

Here’s what the difference plot looks like with the satellite periods shown:

The downward trend during NOAA-7 is apparent, as is the strange drop in NOAA-11. NOAA-14 is visibly too high, and NOAA-16 and -17 display some strange upward spikes. Overall, though, NOAA-16 and -17 do not show a statistically significant difference from the ground data, so no correction was applied to them.

After having confirmed that other researchers had noted similar issues, I felt comfortable in performing a calibration of the AVHRR data to the ground data. The calculated offsets and the resulting Wilcoxon and t-test plot are next:

To make sure that I did not “over-modify” the data, I ran a Steig (3 PC, regpar=3, 42 ground stations) reconstruction. The resulting trend was 0.1079 deg C/decade and the trend maps looked nearly identical to the Steig reconstructions. Therefore, the satellite offsets – while they do produce a greater trend when not corrected – do not seem to have a major impact on the Steig result. This should not be surprising, as most of the temperature rise in Antarctica occurs between 1957 and 1970.

II: PCA

One of the items that we’ve spent a lot of time doing sensitivity analysis is the PCA of the AVHRR data. Between Jeff Id, Jeff C, and myself, we’ve performed somewhere north of 200 reconstructions using different methods and different numbers of retained PCs. Based on that, I believe that we have a pretty good feel for the ranges of values that the reconstructions produce, and we all feel that the 3 PC, regpar=3 solution does not accurately reproduce Antarctic temperatures. Unfortunately, our opinions count for very little. We must have a solid basis for concluding that Steig’s choices were less than optimal – not just opinions.

How many PCs to retain for an analysis has been the subject of much debate in many fields. I will quickly summarize some of the major stopping rules:

1. Kaiser-Guttman: Include all PCs with eigenvalues greater than the average eigenvalue. In this case, this would require retention of 73 PCs.

2. Scree Analysis: Plot the eigenvalues from largest to smallest and take all PCs where the slope of the line visibly ticks up. This is subjective, and in this case it would require the retention of 25 – 50 PCs.

3. Minimum explained variance: Retain PCs until some preset amount of variance has been explained. This preset amount is arbitrary, and different people have selected anywhere from 80-95%. This would justify including as few as 14 PCs and as many as 100.

4. Broken stick analysis: Retain PCs that exceed the theoretical scree plot of random, uncorrelated noise. This yields precisely 11 PCs.

5. Bootstrapped eigenvalue and eigenvalue/eigenvector: Through iterative random sampling of either the PCA matrix or the original data matrix, retain PCs that are statistically different from PCs containing only noise. I have not yet done this for the AVHRR data, though the bootstrap analysis typically yields about the same number (or a slightly greater number) of significant PCs as broken stick.

The first 3 rules are widely criticized for being either subjective or retaining too many PCs. In the Jackson article below, a comparison is made showing that 1, 2, and 3 will select “significant” PCs out of matrices populated entirely with uncorrelated noise. There is no reason to retain noise, and the more PCs you retain, the more difficult and cumbersome the analysis becomes.

The last 2 rules have statistical justification. And, not surprisingly, they are much more effective at distinguishing truly significant PCs from noise. The broken stick analysis typically yields the fewest number of significant PCs, but is normally very comparable to the more robust bootstrap method.

Note that all of these rules would indicate retaining far more than simply 3 PCs. I have included some references:

Click to access North_et_al_1982_EOF_error_MWR.pdf

I have not yet had time to modify a bootstrapping algorithm I found (it was written for a much older version of R), but when I finish that, I will show the bootstrap results. For now, I will simply present the broken stick analysis results.

The broken stick analysis finds 11 significant PCs. PCs 12 and 13 are also very close, and I suspect the bootstrap test will find that they are significant. I chose to retain 13 PCs for the reconstruction to follow.

Without presenting plots for the moment, retaining more than 11 PCs does not end up affecting the results much at all. The trend does drop slightly, but this is due to better resolution on the Peninsula warming. The rest of the continent does not change if additional PCs are added. The only thing that changes is the time it takes to do the reconstruction.

Remember that the purpose of the PCA on the AVHRR data is not to perform factor analysis. The purpose is simply to reduce the size of the data to something that can be computed. The penalty for retaining “too many” – in this case – is simply computational time or the inability for RegEM to converge. The penalty for retaining too few, on the other hand, is a faulty analysis.

I do not see how the choice of 3 PCs can be justified on either practical or theoretical grounds. On the practical side, RegEM works just fine with as many as 25 PCs. On the theoretical side, none of the stopping criteria yield anything close to 3. Not only that, but these are empirical functions. They have no direct physical meaning. Despite claims in Steig et al. to the contrary, they do not relate to physical processes in Antarctica – at least not directly. Therefore, there is no justification for excluding PCs that show significance simply because the other ones “look” like physical processes. This latter bit is a whole other discussion that’s probably post worthy at some point, but I’ll leave it there for now.

III: RegEM

We’ve also spent a great deal of time on RegEM. Steig & Co. used a regpar setting of 3. Was that the “right” setting? They do not present any justification, but that does not necessarily mean the choice is wrong. Fortunately, there is a way to decide.

RegEM works by approximating the actual data with a certain number of principal components and estimating a covariance from which missing data is predicted. Each iteration improves the prediction. In this case (unlike the AVHRR data), selecting too many can be detrimental to the analysis as it can result in over-fitting, spurious correlations between stations and PCs that only represent noise, and retention of the initial infill of zeros. On the other hand, just like the AVHRR data, too few will result in throwing away important information about station and PC covariance.

Figuring out how many PCs (i.e., what regpar setting to use) is a bit trickier because most of the data is missing. Like RegEM itself, this problem needs to be approached iteratively.

The first step was to substitute AVHRR data for station data, calculate the PCs, and perform the broken stick analysis. This yielded 4 or 5 significant PCs. After that, I performed reconstructions with steadily increasing numbers of PCs and performed a broken stick analysis on each one. Once the regpar setting is high enough to begin including insignificant PCs, the broken stick analysis yields the same result every time. The extra PCs show up in the analysis as noise. I first did this using all the AWS and manned stations (minus the open ocean stations).

I ran this all the way up to regpar=20 and the broken stick analysis indicates that 9 PCs are required to properly describe the station covariance. Hence the appropriate regpar setting is 9 if all the manned and AWS stations are used. It is certainly not 3, which is what Steig used for the AWS recon.

I also performed this for the 42 manned stations Steig selected for the main reconstruction. That analysis yielded a regpar setting of 6 – again, not 3.

The conclusion, then, is similar to the AVHRR PC analysis. The selection of regpar=3 does not appear to be justifiable. Additional PCs are necessary to properly describe the covariance.

IV: THE RECONSTRUCTION

So what happens if the satellite offsets are properly accounted for, the correct number of PCs are retained, and the right regpar settings are used? I present the following panel:

(Right side) Reconstruction trends using just the model frame.

RegEM PTTLS does not return the entire best-fit solution (the model frame, or surface). It only returns what the best-fit solution says the missing points are. It retains the original points. When imputing small amounts of data, this is fine. When imputing large amounts of data, it can be argued that the surface is what is important.

RegEM IPCA returns the surface (along with the spliced solution). This allows you to see the entire solution. In my opinion, in this particular case, the reconstruction should be based on the solution, not a partial solution with data tacked on the end. That is akin to doing a linear regression, throwing away the last half of the regression, adding the data back in, and then doing another linear regression on the result to get the trend. The discontinuity between the model and the data causes errors in the computed trend.

Regardless, the verification statistics are computed vs. the model – not the spliced data – and though Steig did not do this for his paper, we can do it ourselves. (I will do this in a later post.) Besides, the trends between the model and the spliced reconstructions are not that different.

Overall trends are 0.071 deg C/decade for the spliced reconstruction and 0.060 deg C/decade for the model frame. This is comparable to Jeff’s reconstructions using just the ground data, and as you can see, the temperature distribution of the model frame is closer to that of the ground stations. This is another indication that the satellites and the ground stations are not measuring exactly the same thing. It is close, but not exact, and splicing PCs derived solely from satellite data on a reconstruction where the only actual temperatures come from ground data is conceptually suspect.

When I ran the same settings in RegEM PTTLS – which only returns a spliced version – I got 0.077 deg C/decade, which checks nicely with RegEM IPCA.

I also did 11 PC, 15 PC, and 20 PC reconstructions. Trends were 0.081, 0.071, and 0.069 for the spliced and 0.072, 0.059, and 0.055 for the model. The reason for the reduction in trend was simply better resolution (less smearing) of the Peninsula warming.

Additionally, I ran reconstructions using just Steig’s station selection. With 13 PCs, this yielded a spliced trend of 0.080 and a model trend of 0.065. I then did one after removing the open-ocean stations, which yielded 0.080 and 0.064.

Note how when the PCs and regpar are properly selected, the inclusion and exclusion of individual stations does not significantly affect the result. The answers are nearly identical whether 98 AWS/manned stations are used, or only 37 manned stations are used. One might be tempted to call this “robust”.

V: THE COUP DE GRACE

Let us assume for a moment that the reconstruction presented above represents the real 50-year temperature history of Antarctica. Whether this is true is immaterial. We will assume it to be true for the moment. If Steig’s method has validity, then, if we substitute the above reconstruction for the raw ground and AVHRR data, his method should return a result that looks similar to the above reconstruction.

Let’s see if that happens.

For the substitution, I took the ground station model frame (which does not have any actual ground data spliced back in) and removed the same exact points that are missing from the real data.

I then took the post-1982 model frame (so the one with the lowest trend) and substituted that for the AVHRR data.

I set the number of PCs equal to 3.

I set regpar equal to 3 in PTTLS.

I let it rip.

Look familiar?

Overall trend: 0.102 deg C/decade.

Remember that the input data had a trend of 0.060 deg C/decade, showed cooling on the Ross and Weddel ice shelves, showed cooling near the pole, and showed a maximum trend in the Peninsula.

If “robust” means the same answer pops out of a fancy computer algorithm regardless of what the input data is, then I guess Antarctic warming is, indeed, “robust”.

———————————————

Code for the above post is HERE.

I’m amazed at how well Fig 10 matches RegEM to surface station data.

Outsanding. And a very clear presentation.

Am I understanding correctly that the truncated PCA form of the calculation is the ‘model frame’ version since it replaces the original data as well? – Modified of course according to your 1,2,3 … PC method?

#1 Thanks for the glowing intro.

I was quite surprised how well the PCs followed the ground stations in RegEM. Something I realized about the method – at least in this case – is that RegEM does not preserve the global covariance information. It seems to conserve it locally around the stations, but allows the gross overall covariance to change in accordance with the ground stations. That makes me more comfortable that this type of analysis – if performed properly – can yield useful results.

And as I said before, I think the concept is very clever. They seem to have been tripped up by confirmation bias.

Also, one other thing – if you look at both the sea ice anomalies and the model predictions shown by Steig – the reconstruction above matches them much much much better. I think it’s kind of interesting that it matches the models.

I checked the model results presented. It sure looks a hell of a lot better. It also like you said matches sea ice. Do you mind if I copy them to the bottom of the post?

Are you able to see your emails?

#3 Not exactly. Even PTTLS has a “model frame” – it just doesn’t return the frame.

The model frame is like the linear regression line. It represents the best fit of the data. In the case of RegEM PTTLS, the missing data come directly from the model frame. They are the regression line.

I’ve spent a bit of time trying to figure out how I can get the model frame out of PTTLS. My linear algebra skills are poor, so I’ve so far not been able to get anywhere with it. But if you look at the code for PTTLS, you can see that it computes a solution on the missing data explicitly while excluding the actual data (in the “pttls” subroutine). For the actual data, it computes “Sr”, which represents the difference between the model and the actual data. So all the information is there – I just haven’t figured out how to get it yet.

Steve’s PCA and the IPCA version compute the full solution all at once. They don’t explicitly measure the difference between the model and the actual data. This was one of Schneider’s criticisms of Steve’s version. Because it does not track the difference between the model and actual, it apparently does not converge to the maximum likelihood solution. In practice, though, this seems to be of little consequence as the results are nearly identical to Schneider’s PTTLS. I need to learn more about this.

However, the nice thing about the PCA and IPCA is that the model frames are directly accessible (matrix “Xmis”). The regular solution – the one that retains the actual data – is also returned as matrix “X”.

So there is indeed a model for the PTTLS version – I just haven’t figured out how to extract it yet.

#5 I can see the emails at home, but I can’t really reply to them. I can extract the relevant graphics from the Steig paper tomorrow and send them to you.

#6 I thought so, I just worded it poorly. I haven’t messed with the R version but my recollection is that there is a simple mask to chop the non-missing data from the imputed in matlab’s version. Matlab allows single stepping through the code.

Jeff-

Shoot me an e-mail in your spare time. After reading this, I had a suggestion for your site.

And thanks to Ryan for all of his hard work on this.

Thanks for giving such a detailed explanation of what you did. I’m not an expert statistician by any stretch of the imagination, so very detailed and clear explanations like this make these analyses easier for me to understand.

Has Nature offered you the cover, yet? LOL

I wondered where the comments went, WUWT picked up this post.

http://wattsupwiththat.com/2009/05/20/steig-et-al-antarctica-warming-paper-process-is-finally-replicated-and-dealt-a-blow-to-robustness/

I sent Anthony Watts an email last night so he could read it not expecting in any way that he would use it – only for his own reading. I’m glad it’s getting the attention this work deserves.

Ryan O, I need to review what you have done in more detail, but at this point it would appear to this layperson that you are well on your way to having the evidence and the ability to articulate a major and publishable critique of Steig et al. (2009). I like your dealing with the 1982 “splice”.

What I did not see is your re-reconstruction trend confidence limits. I think those limits are crucial to the warming issues, i.e. is the trend significantly different than zero, and if it is, at what limits? I believe the Steig authors were most interested in (1) showing the Antarctica Peninsula warming spreading to West Antarctica and Antarctica as a whole and (2) to be able to show a warming trend for Antarctica that was significantly different than zero – and using a convenient time period to show that that could be reasonably connected with another event, i.e. the initial use of ground stations on the Antarctica for recording temperatures.

I think most climate science papers strive heartily to show statistically significant trends, except perhaps when they want to show that the climate models cannot be shown to be different than the observed results. I suspect the difference between publishing or not can be in the ability to show of statistical significance. Of course, then a valid critique would be to show no statistical significance – otherwise it might be replied to your critique that you showed a flaw in the methods but the original result stands and therefore your critique is less important.

Ryan (and Jeff): Thank you again for the tireless work on this project. Ryan, I believe that you have shown definitively that low order processing of Steig was a major flaw and the analysis was not robust. Your homework on sattelite error and calibration is very impressive and appreciated. While Comiso’s cloud masking process is still a mystery, at least the “robustness” question does not appear to depend on this.

Higher order processing unshackles the process to allow for more defined temporal and spatial distribution of trend. With autocorrelation and Chladni patterns is it possible that some spurious correlations still remain? Would a scatterplot of distance correlations comparing Ryan’s analysis to surface stations be helpful at this point?

#12 Kenneth Fritsch

I would add a third item to your list – that being the interest in showing a general warming trend throughout the 50 year time period. IMO there would have been no paper (at least not on the cover of Nature) if Steig’s analysis showed a flat or cooling trend in the last 40 years.

Great job , Ryan and Jeffs

Interesting the RC hasn’t posted anything since May 7 (Tamino is cold since May 11, and both of the last blogs were political cheerleading for the AGW crowds pleasure).

Since both sites trumpeted Steig, you would think they would counter your analysis, if they had anything to counter it with.

Silence speaks great volumes.

#13 “With autocorrelation and Chladni patterns is it possible that some spurious correlations still remain?”

Sorry for the slow reply, it has been a busy day. I think it’s quite likely that there are oddities left but it may have been minimized. We’ll be looking at that in the near future.

Kenneth:

I did not calculate the confidence limits this time around because I was more interested in the geographical distribution of trends. From what I remember from the sensitivity tests earlier, they were about +/- 0.07, so the overall trend would not be statistically different from zero.

With that said, I think it is important to keep in mind that these confidence limits only account for the linear fit. They assume the PCA on the AVHRR data, the ability of the AVHRR data to substitute for ground data, the imputation of the ground stations, and the covariance are perfect. Any uncertainty in those items also increases the confidence limits.

Ryan O,

I am just asking, lol, but can you, will you,(jeffs too 🙂 )

use this talent to replicate USA temps with WUWT found CRN1 CRN2

stations? Please, please, pretty please! (pant, pant, wag tail) 😉

#15 Hal, in fact It is quite clear from Ryan’s detailed comments 369-375 on RCs ‘Antarctic warming is robust’ thread (following on from my 353) that they have no answer to this.

So Ryan and Jeff, when are you going to write all this up as a paper and submit it?

I would agree with the added uncertainties you mention here, Ryan, but can they be quantified – for the publication of your critique.

I hope that does not sound too TCOish.

#20 Not at all. I honestly do not know how to quantify some of those. The 1982 North paper on PCA suggests some ways to quantify the PCA portion, but I do not know how to quantify the imputation part. I think that such a task will require some outside assistance.

Cant get your code to work. The script keeps spitting the dummy at:

> reader.names=read.table(“manned.all.txt”, stringsAsFactors=FALSE)[[1]]

Error in file(file, “r”) : cannot open the connection

In addition: Warning message:

In file(file, “r”) :

cannot open file ‘manned.all.txt’: No such file or directory

Any suggestions?

If you go all the way down to the bottom of the code, there’s a section called “LOAD AND PARSE DATA”. The first command there reads:

#down.data()

Remove the # in front of it and run down.data(). That will scrape the BAS READER site for the surface temperatures. It saves them into your working directory, and then “l.data=load.data()” loads them into R.

Once you’ve done the download once, you can put the # back in front of down.data(). There’s no need to download the data again and again.

Thanks for the suggestion but I did uncomment the down.data() code. the problem is not downloading Steig’s data but the “manned.all.txt” and “aws.all.txt” files which are not on Steig’s site and there is no direction to them.

Oops! Replace this line:

reader.names=read.table(“manned.all.txt”, stringsAsFactors=FALSE)[[1]]

With this:

reader.names=all.idx[1:44, 1]

And this line:

reader.names=read.table(“aws.all.txt”, stringsAsFactors=FALSE)[[1]]

With this:

reader.names=all.idx[45:109, 1]

Sorry about that. It’s been through so many revisions . . .

Thanks for your help.

Congratulations on your excellent work, Ryan.

I worked out a few hours ago that I needed to make the changes to your script per #26 and then I saw your comment to that effect – I should have looked here first. 🙂

May I ask if the last line in the downloadable Recon.txt file, “regem.stats=get.stats.station(base, gnd.temps2)”, is meant to be the last line of your code? I was rather hoping that example code for a satellite PCs reconstruction producing one of your coloured countour trend maps would be included: my skills with R’s plotting functions are very poor and I have failed to reproduce any of your maps using your plt.map function!

I have been comparing the trends in the Reader .txt file data that now exist with those per the data in early February and that used by Steig. There are a number of ground stations where updated data (generally for more recent years) has led to measurable changes in trend. Some of the changes are quite large – e.g, for AWS GEO3 the 1957-2006 trend for the data used by Steig was +1.49 degrees C/ decade, but using the latest data it is only +0.35.

One minor query: you are treating Adelaide Island as an ocean station, but perhaps this is debatable as Adelaide Island virtually touches the Antarctic peninsula? Unusually for the peninsula, Adelaide Island did not have a positive temperature trend.

Nic, I will answer the script questions a bit later, but the Adelaide question is easy. It had a very short record length and there was a +1 deg C step in the middle of it that is not shared by any other station with overlapping data. Something’s wrong with the data, so I excluded it. It doesn’t matter too much, though . . . I did several reconstructions with it included and there wasn’t much difference.

Nic,

1: The last line. No, that’s not meant to be the last line. There’s a whole set of programming that I still need to do for the verification statistics. That’s just the last line at the time I sent Jeff this post.

2. Example code for the plots. The first thing you need to make sure is that you have packages “maps” and “mapproj” installed from CRAN. Then you need to run all of the code up to and including the line:

r.13=do.recon(dat=dat, base=base, PC.max=13, mode=”ipca”, base.impute=c(TRUE, 5000, 9, 0.01, 0.01), dat.impute=c(FALSE, 5000, 9, 0.01, 0.01))

That line actually does the recon. When the recon finishes, R will print out the following:

[2] Elements in returned list:

[3] =========================

[4] [[1]]: Reconstruction time series (using X)

[5] [[2]]: Reconstruction time series (using Xmis)

[6] [[3]]: Reconstruction overall monthly means time series (using X)

[7] [[4]]: Reconstruction overall monthly means time series (using Xmis)

[8] [[5]]: Imputed PCs

[9] [[6]]: Imputation results for the predictor matrix

[10] [[7]]: Imputation results for the PCs

[11] (If imputed separately)

[12] [[8]][[1]]: PCs as input into RegEM

[13] [[8]][[2]]: Left singular vectors (1 – PC.max)

[14] [[8]][[3]]: Right singular vectors (all)

[15] [[8]][[4]]: Eigenvalues (all)

[16] Trends using X:

[17] [[9]][[1]]: Linear trends ([1957, 1] to [1981, 12])

[18] [[9]][[2]]: Linear trends ([1957, 1] to [2006, 12])

[19] [[9]][[3]]: Linear trends ([1967, 1] to [2006, 12])

[20] [[9]][[4]]: Linear trends ([1982, 1] to [2006, 12])

[21] [[9]][[5]]: Linear trends ([1982, 1] to [1994, 7])

[22] [[9]][[6]]: Linear trends ([1982, 1] to [2000, 12])

[23] [[9]][[7]]: Linear trends ([1994, 6] to [2006, 12])

[24] [[9]][[8]]: Linear trends ([2000, 12] to [2006, 12])

[25] Trends using Xmis:

[26] [[10]][[1]]: Linear trends ([1957, 1] to [1981, 12])

[27] [[10]][[2]]: Linear trends ([1957, 1] to [2006, 12])

[28] [[10]][[3]]: Linear trends ([1967, 1] to [2006, 12])

[29] [[10]][[4]]: Linear trends ([1982, 1] to [2006, 12])

[30] [[10]][[5]]: Linear trends ([1982, 1] to [1994, 7])

[31] [[10]][[6]]: Linear trends ([1982, 1] to [2000, 12])

[32] [[10]][[7]]: Linear trends ([1994, 6] to [2006, 12])

[33] [[10]][[8]]: Linear trends ([2000, 12] to [2006, 12])

To make one of the maps, type:

plt.map(r.13[[9]][[1]])

Or whatever period interests you.

Nic,

3. Effects of READER changes: Almost nil. The overall difference when doing a Steig-style recon (3 PCs, regpar=3) is actually to increase the trend just a tad. But overall, the effect is negligible.

I wonder if Comiso will ever release the enhanced cloud mask methods. NOAA 16 looked to have problems in Jeff C.’s post here:

https://noconsensus.wordpress.com/2009/03/31/measuring-the-clouds/#more-3169

The difference plots (shown by Jeff) between NSIDC AVHRR and Steig’s AVHRR showed a substantial upward adjustment of temp anomalies in the NOAA 16 segment – enough that Ryan’s sat/station difference plots seemed ok for NOAA 16 segment. Does the quality of this process hold for all situations?

If Comiso did some type of ground truthing with the surface stations and failed to account for sattelite degradation, this could conciecably lead to complications. Unfortunately, it is speculation without the method.

Ryan

30. Thanks for the help – I should have figured that out. I have now spent more time going through your reconstruction code and understand better how it all fits together.

29 and 31. Noted. I am not surprised that the changes to the READER data have had little overall effect, but they do show that the data Steig used was even more imperfect than apparent earlier.

There is one thing that has been bugging me for some time which I have been unable to come up with an answer to. Probably I am being stupid or missing something, but in any event I would much appreciate any light you can shed on the issue.

My problem is that the 3 PCs incorporated in Steig’s reconstruction, ant_recon.txt, are not the same for 1982-2006 as the first 3 PCs resulting from prcomp or SVD on the raw (uncalibrated) cloudmasked AVHRR data (nor are they linear combinations of each other). As there is no missing data in the 3 PCs for that period, surely ant_recon should simply reflect the first 3 PCs from the AVHRR data, unaltered?

One can see that this is the case if you run a Steig AVHRR reconstruction, which I think can be done using your code with

clip=form.steig

dat=window(calc.anom(all.avhrr), start=c(1982), end=c(2006,12))

base=window(parse.gnd.data(all.gnd, form=clip), start=1957, end=c(2006,12))

base=calc.anom(base)

r.3=do.recon(dat=dat, base=base, PC.max=3, mode=”pttls”, base.impute=c(FALSE, 5000, 9, 0.01, 0.01), dat.impute=c(FALSE, 5000, 3, 0.01, 0.01))

The difference is evident from the continent wide average temperature trends /decade, of 0.119 for 1957-2006 and 0.245 for 1982-2006 per r.3. These compare with 0.118 and 0.260 for Steig’s ant_recon. Although the 1957-2006 average trends are almost identical, the 1982-2006 ones are significantly different, reflecting differences in the 1982-2006 PCs.

The all.avhrr (anomaly) data has an average trend of 0.261: I did wonder if Steig modified his 3 AVHRR PCs so that they produced the same trend in average temperature as the AVHRR data, but I can’t see that doing so could have produced all the effects seen.

Nic, these are things that have made us wonder, too. I suspect that the cloudmasked AVHRR placed on-line is slightly different than the actual version used in the paper.

Tamino’s ‘dangerous curves’ thread has a link to this article. Apparently they can’t even be bothered to look at results which demonstrate the clear problems with the paper. They don’t realize what a joke they are making themselves.

“Open Minds” indeed.

I’m impressed with the power of the ostrich response. Its apparently deeply ingrained in the lower cortex next to the knee jerk and herd mentality.

This post has been up for a week and I’ve been looking in vain for any kind of a response from the alarmist camp. I think they’re all hoping that if they pretend it isn’t there it will just disappear. Just out of curiosity, Jeff, has this article been getting more hits than the number of comments would indicate?

#38, It’s gotten quite a number of hits (1200 +) for a post picked up by WUWT. I usually get less hits here when a post runs over there. When a post runs at CA SteveM will usually put a link in which has run into thousands per day on a single post.

At WUWT it was #1 of all the WordPress posts for a bit – that includes CNN, FOX news and People magazine blogs. WUWT does that almost every day now though.

re: #16 Jeff Id

Jeff, back on March 26th you posted a reconstruction using the NSIDC sat data:

https://noconsensus.wordpress.com/2009/03/26/nsidc-sat-data-pca/

You suggested in the post that the ocean pixels broke the autocorrelation and consequently yielded non-Chladni pattern eigenvectors. Is there any reason to believe apriori that these ocean pixels should be excluded? Have you considered adding these ocean pixels back into the Steig/Comiso data?

1200 hits and 40 comments. Anyone care to make book on how many of those hits were Team members? I suspect that a week which included a long weekend is just not enough time to evaluate this work and they’re tying themselves into knots trying to formulate a riposte. Offer Dr. Steig a guest post. If we’re nice to him, who knows? Maybe he’ll come over to the Dark Side.

#40,

I haven’t considered adding them in, I’m not sure there’s any benefit. Jeff C was working on cleaning up the data, if he decides to finish it would be a more reasonable result. In it’s current state it was really noisy.

Согласен с автором . У меня точно такое же мнение 🙂