There is always something going on around here.

Until now, all the work on the Antarctic reconstruction has been done without statistical verification. We believed the higher-PC reconstructions were better based on correlation vs. distance plots, visual comparison to station trends and, of course, their closer approximation of simple area-weighted reconstructions using surface station data.

The authors of Steig et al. have not been queried by myself or anyone else that I’m aware of regarding the quality of the higher PC reconstructions, and the team has largely ignored what has been going on over on the Air Vent. This post, however, demonstrates strongly improved verification statistics which should send chills down their collective backs.

Ryan was generous in giving credit to others with his wording, but he put together this amazing piece of work himself, using bits of code and knowledge gained from the numerous other posts by himself and others on the subject. He’s done a top-notch job again, through a Herculean effort in coding and debugging.

If you didn’t read Ryan’s other post which led to this work the link is:

Antarctic Coup de Grace

——————————————————————————–

HOW DO WE CHOOSE?

In order to choose which version of Antarctica is more likely to represent the real 50-year history, we need to calculate statistics with which to compare the reconstructions. For this post, we will examine r, r^2, R^2, RE, and CE for various conditions, including an analysis of the accuracy of the RegEM imputation. While Steig’s paper did provide verification statistics against the satellite data, the only verification statistics that related to ground data were provided by the restricted 15-predictor reconstruction, where the withheld ground stations were the verification target. We will perform a more comprehensive analysis of performance with respect to both RegEM and the ground data. Additionally, we will compare how our reconstruction performs against Steig’s reconstruction using the same methods used by Steig in his paper, along with a few more comprehensive tests.

To calculate what I would consider a healthy battery of verification statistics, we need to perform several reconstructions. The reason for this is to evaluate how well the method reproduces known data. Unless we know how well we can reproduce things we know, we cannot determine how likely the method is to estimate things we do not know. This requires that we perform a set of reconstructions by withholding certain information. The reconstructions we will perform are:

1. A 13-PC reconstruction using all manned and AWS stations, with ocean stations and Adelaide excluded. This is the main reconstruction.

2. An early calibration reconstruction using AVHRR data from 1982-1994.5. This will allow us to assess how well the method reproduces the withheld AVHRR data.

3. A late calibration reconstruction using AVHRR data from 1994.5-2006. Coupled with the early calibration, this provides comprehensive coverage of the entire satellite period.

4. A 13-PC reconstruction with the AWS stations withheld. The purpose of this reconstruction is to use the AWS stations as a verification target (i.e., see how well the reconstruction estimates the AWS data, and then compare the estimation against the real AWS data).

5. The same set of four reconstructions as above, but using 21 PCs in order to assess the stability of the reconstruction to included PCs.

6. A 3-PC reconstruction using Steig’s station complement to demonstrate replication of his process.

7. A 3-PC reconstruction using the 13-PC reconstruction model frame as input to demonstrate the inability of Steig’s process to properly resolve the geographical locations of the trends and trend magnitudes.
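The early and late calibration reconstructions (items 2 and 3) amount to splitting the satellite period at 1994.5 and letting each half take a turn as the withheld verification target. A minimal sketch of that bookkeeping follows. It is written in Python rather than the R of the actual script, is not taken from Recon.R, and assumes monthly data running from January 1982 through December 2006; the function and variable names are hypothetical.

```python
def monthly_times(start_year, end_year):
    """Decimal-year stamps for every month from start_year through end_year."""
    return [year + month / 12.0
            for year in range(start_year, end_year + 1)
            for month in range(12)]


def split_periods(times, split_point):
    """Return (early, late) index lists for times before / at-or-after split_point."""
    early = [i for i, t in enumerate(times) if t < split_point]
    late = [i for i, t in enumerate(times) if t >= split_point]
    return early, late


# Assumed satellite period: monthly values, 1982 through 2006.
times = monthly_times(1982, 2006)
early_idx, late_idx = split_periods(times, 1994.5)

# Each experiment calibrates on one half and verifies on the withheld half.
experiments = {
    "early calibration": {"calibrate": early_idx, "verify": late_idx},
    "late calibration": {"calibrate": late_idx, "verify": early_idx},
}
```

Running both experiments means every month of the satellite record is used as withheld data exactly once.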


Using the above set of reconstructions, we will then calculate the following sets of verification statistics:


1. Performance vs. the AVHRR data (early and late calibration reconstructions)

2. Performance vs. the AVHRR data (full reconstruction model frame)

3. Comparison of the spliced and model reconstruction vs. the actual ground station data.

4. Comparison of the restricted (AWS data withheld) reconstruction vs. the actual AWS data.

5. Comparison of the RegEM imputation model frame for the ground stations vs. the actual ground station data.


The provided script performs all of the required reconstructions and makes all of the required verification calculations. I will not present them all here (because there are a lot of them). I will present the ones that I feel are the most telling and important. In fact, I have not yet plotted all the different results myself. So for those of you with R, there are plenty of things to plot.

Without further ado, let’s take a look at a few of those things.

You may remember the figure above; it represents the split reconstruction verification statistics for Steig’s reconstruction. Note the significant regions of negative CE values (which indicate that a simple average of observed temperatures explains more variance than the reconstruction). Of particular note, the region where Steig reports the highest trend – West Antarctica and the Ross Ice Shelf – shows the worst performance.
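As a reminder of why a negative value is a failure, RE and CE are conventionally written as below. The notation is introduced here for illustration and does not come from the script: $x_t$ is the observed value, $\hat{x}_t$ the reconstructed value, and $\bar{x}_{\mathrm{cal}}$, $\bar{x}_{\mathrm{ver}}$ are the observed means over the calibration and verification periods.

$$
\mathrm{RE} = 1 - \frac{\sum_{t \in \mathrm{ver}} (x_t - \hat{x}_t)^2}{\sum_{t \in \mathrm{ver}} (x_t - \bar{x}_{\mathrm{cal}})^2},
\qquad
\mathrm{CE} = 1 - \frac{\sum_{t \in \mathrm{ver}} (x_t - \hat{x}_t)^2}{\sum_{t \in \mathrm{ver}} (x_t - \bar{x}_{\mathrm{ver}})^2}
$$

CE goes negative whenever the squared reconstruction error exceeds the squared deviation of the observations from their own verification-period mean, which is to say that predicting that mean at every time step would have done better.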

Let’s compare to our reconstruction:

There still are a few areas of negative RE (too small to see in this panel) and some areas of negative CE. However, unlike the Steig reconstruction, ours performs well in most of West Antarctica, the Peninsula, and the Ross Ice Shelf. All values are significantly higher than the Steig reconstruction, and we show much smaller regions with negative values.

As an aside, the r^2 plots are not corrected by the Monte Carlo analysis yet. However, as shown in the previous post concerning Steig’s verification statistics, the maximum r^2 values using AR(8) noise were only 0.019, which produces an indistinguishable change from Fig. 3.
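For readers unfamiliar with this kind of Monte Carlo benchmark, the idea can be sketched as follows: generate many autoregressive noise series, correlate each with the target series, and see how large r^2 gets by chance alone. This is a Python illustration only, not the procedure from the earlier post; the AR coefficients, the number of simulations, and all function names are placeholders.

```python
import random


def ar_noise(coeffs, n, rng, burn_in=200):
    """Simulate n values of x[t] = sum(coeffs[k] * x[t-1-k]) + white noise."""
    series = [0.0] * len(coeffs)
    for _ in range(n + burn_in):
        value = sum(c * series[-1 - k] for k, c in enumerate(coeffs))
        series.append(value + rng.gauss(0.0, 1.0))
    return series[-n:]  # drop the start-up values


def r_squared(x, y):
    """Squared Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy * sxy / (sxx * syy)


def noise_r2_threshold(target, coeffs, n_sims=500, quantile=0.95, seed=0):
    """Chosen quantile of r^2 between AR noise and target (1.0 gives the maximum)."""
    rng = random.Random(seed)
    sims = sorted(r_squared(ar_noise(coeffs, len(target), rng), target)
                  for _ in range(n_sims))
    return sims[int(quantile * (n_sims - 1))]


# Placeholder target series and placeholder AR(2) coefficients.
target = ar_noise([0.3, 0.1], 300, random.Random(1))
threshold = noise_r2_threshold(target, [0.3, 0.1], n_sims=200)
```

An observed r^2 well above `threshold` is unlikely to be a product of autocorrelated noise; an AR(8) version would simply pass eight coefficients.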

Now that we know that our method provides a more faithful reproduction of the satellite data, it is time to see how faithfully our method reproduces the ground data. A simple way to compare ours against Steig’s is to look at scatterplots of reconstructed anomalies vs. ground station anomalies:


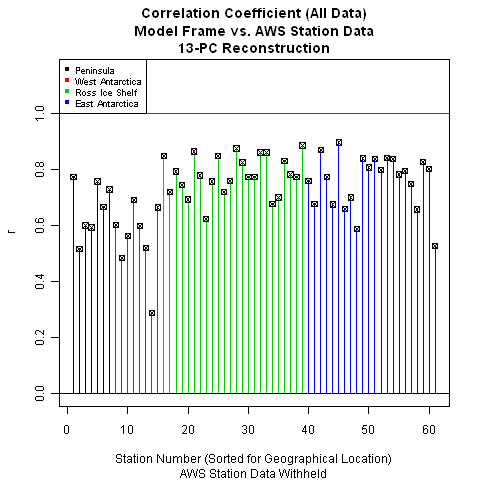

The 13-PC reconstruction shows significantly improved performance in predicting ground temperatures as compared to the Steig reconstruction. This improved performance is also reflected in plots of correlation coefficient:

As noted earlier, the performance in the Peninsula, West Antarctica, and the Ross Ice Shelf is noticeably better for our reconstruction. Examining the plots this way provides a good indication of the geographical performance of the two reconstructions. Another way to look at this – one that allows a bit more precision – is to plot the results as bar plots, sorted by location:

The difference is quite striking.

While a good performance with respect to correlation is nice, this alone does not mean we have a “good” reconstruction. One common problem is over-fitting during the calibration period (where the calibration period is defined as the periods over which actual data is present). This leads to fantastic verification statistics during calibration, but results in poor performance outside of that period.

This is the purpose of the restricted reconstruction, where we withhold all AWS data. We then compare the reconstruction values against the actual AWS data. If our method resulted in overfitting (or is simply a poor method), our verification performance will be correspondingly poor.

Since Steig did not use AWS stations for performing his TIR reconstruction, this allows us to do an apples-to-apples comparison between the two methods. We can use the AWS stations as a verification target for both reconstructions. We can then compare which reconstruction results in better performance from the standpoint of being able to predict the actual AWS data. This is nice because it prevents us from later being accused of holding the reconstructions to different standards.

Note that since all of the AWS data was withheld, RE is undefined. RE uses the calibration period mean, and there is no calibration period for the AWS stations because we did the reconstruction without including any AWS data. We could run a split test like we did with the satellite data, but that would require additional calculations and is an easier test to pass regardless. Besides, the reason we have to run a split test with the satellite data is that we cannot withhold all of the satellite data and still be able to do the reconstruction. With the AWS stations, however, we are not subject to the same restriction.

With that, I think we can safely put to bed the possibility that our calibration performance was due to overfitting. The verification performance is quite good, with the exception of one station in West Antarctica (Siple). Some of you may be curious about Siple, so I decided to plot both the original data and the reconstructed data. The problem with Siple is clearly the short record length and strange temperature swings (in excess of 10 degrees), which may indicate problems with the measurements:

While we should still be curious about Siple, we also would not be unjustified in considering it an outlier given the performance of our reconstruction at the remainder of the station locations.

Leaving Siple for the moment, let’s take a look at how Steig’s reconstruction performs.

Not too bad – but not as good as ours. Curiously, Siple does not look like an outlier in Steig’s reconstruction. In its place, however, seems to be the entire Peninsula. Overall, the correlation coefficients for the Steig reconstruction are poorer than ours. This allows us to conclude that our reconstruction more accurately calculated the temperature in the locations where we withheld real data.

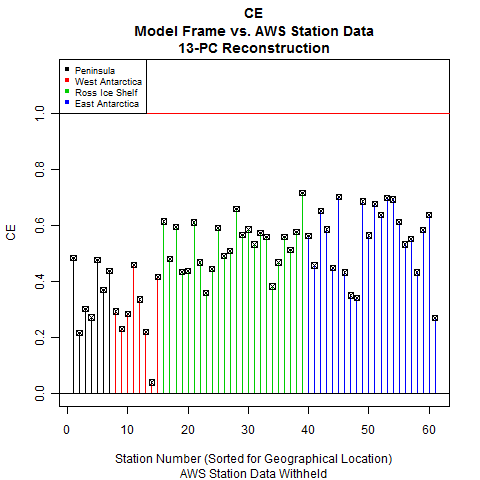

Along with correlation coefficient, the other statistic we need to look at is CE. Of the three statistics used by Steig – r, RE, and CE – CE is the most difficult statistic to pass. This is another reason why we are not concerned about lack of RE in this case: RE is an easier test to pass.


The difference in performance between the two reconstructions is more apparent in the CE statistic. Steig’s reconstruction demonstrates negligible skill in the Peninsula, while our skill in the Peninsula is much higher. With the exception of Siple, our West Antarctic stations perform comparably. For the rest of the continent, our CE statistics are significantly higher than Steig’s – and we have no negative CE values.

So in a test of which method best reproduces withheld ground station data, our reconstruction shows significantly more skill than Steig’s.

The final set of statistics we will look at is the performance of RegEM. This is important because it will show us how faithful RegEM was to the original data. Steig did not perform any verification similar to this because PTTLS does not return the model frame. Unlike PTTLS, however, our version of RegEM (IPCA) does return the model frame. Since the model frame is accessible, it is incumbent upon us to look at it.

Note: In order to have a comparison, we will run a Steig-type reconstruction using RegEM IPCA.

There are two key statistics for this: r and R^2. R^2 is called “average explained variance”. It is a similar statistic to RE and CE with the difference being that the original data comes from the calibration period instead of the verification period. In the case of RegEM, all of the original data is technically “calibration period”, which is why we do not calculate RE and CE. Those are verification period statistics.
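To make the two statistics concrete, here is a small Python sketch of how r and R^2 could be computed for one station by comparing the imputed model frame against the original record wherever original data exist. It reflects one reading of the definitions above rather than the code in the script; the function name and the toy numbers are invented for illustration.

```python
import math


def calibration_stats(original, modeled):
    """Return (r, R^2) over the time steps where original data exist.

    `original` uses None for missing months; `modeled` is complete.
    """
    pairs = [(o, m) for o, m in zip(original, modeled) if o is not None]
    obs = [o for o, _ in pairs]
    fit = [m for _, m in pairs]
    n = len(obs)
    mean_obs = sum(obs) / n
    mean_fit = sum(fit) / n

    ss_obs = sum((o - mean_obs) ** 2 for o in obs)        # variance of the data
    ss_fit = sum((m - mean_fit) ** 2 for m in fit)
    cov = sum((o - mean_obs) * (m - mean_fit) for o, m in zip(obs, fit))
    ss_res = sum((o - m) ** 2 for o, m in zip(obs, fit))  # squared misfit

    r = cov / math.sqrt(ss_obs * ss_fit)
    explained = 1.0 - ss_res / ss_obs  # "average explained variance"
    return r, explained


# Toy station record with two missing months (values are made up).
original = [1.0, None, 3.0, 2.0, None, 4.0]
modeled = [1.5, 2.0, 2.5, 2.0, 3.0, 3.5]
r_value, r2_value = calibration_stats(original, modeled)
```

Unlike r, the R^2 value is penalized by offsets and amplitude errors, not just by a loss of correlation, which is why it is the more stringent of the two.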

Let’s look at how RegEM IPCA performed for our reconstruction vs. Steig’s.

As you can see, RegEM performed quite faithfully with respect to the original data. This is a double-edged sword; if RegEM performs too faithfully, you end up with overfitting problems. However, we already checked for overfitting using our restricted reconstruction (with the AWS stations as the verification target).

While we used regpar settings of 9 (main reconstruction) and 6 (restricted reconstruction), Steig used a regpar setting of only 3. This leads us to question whether that setting was sufficient for RegEM to be able to faithfully represent the original data. The only way to tell is to look, and the next frame shows us that Steig’s performance was significantly worse than ours.

The performance using a regpar setting of 3 is noticeably worse, especially in East Antarctica. This would indicate that a setting of 3 does not provide enough degrees of freedom for the imputation to accurately represent the existing data. And if the imputation cannot accurately represent the existing data, then its representation of missing data is correspondingly suspect.

Another point I would like to note is the heavy weighting of Peninsula and open-ocean stations. Steig’s reconstruction relied on a total of 5 stations in West Antarctica, 4 of which are located on the eastern and southern edges of the continent at the Ross Ice Shelf. The resolution of West Antarctic trends based on the ground stations alone is rather poor.

Now that we’ve looked at correlation coefficients, let’s look at a more stringent statistic: average explained variance, or R^2.

Using a regpar setting of 9 also provides good R^2 statistics. The Peninsula is still a bit wanting. I checked the R^2 for the 21-PC reconstruction and the numbers were nearly identical. Without increasing the regpar setting and running the risk of overfitting, this seems to be about the limit of the imputation accuracy.

Steig’s reconstruction, on the other hand, shows some fairly low values for R^2. The Peninsula is an odd mix of high and low values, West Antarctica and Ross are middling, while East Antarctica is poor overall. This fits with the qualitative observation that the Steig method seemed to spread the Peninsula warming all over the continent, including into East Antarctica – which by most other accounts is cooling slightly, not warming.

CONCLUSION

With the exception of the RegEM verification, all of the verification statistics listed above were performed exactly (split reconstruction) or analogously (restricted 15 predictor reconstruction) by Steig in the Nature paper. In all cases, our reconstruction shows significantly more skill than the Steig reconstruction. So if these are the metrics by which we are to judge this type of reconstruction, ours is objectively superior.

As before, I would qualify this by saying that not all of the errors and uncertainties have been quantified yet, so I’m not comfortable putting a ton of stock into any of these reconstructions. However, I am perfectly comfortable saying that Steig’s reconstruction is not a faithful representation of Antarctic temperatures over the past 50 years and that ours is closer to the mark.

NOTE ON THE SCRIPT

If you want to duplicate all of the figures above, I would recommend letting the entire script run. Be patient; it takes about 20 minutes. While this may seem long, remember that it is performing 11 different reconstructions and calculating a metric butt-ton of verification statistics.

There is a plotting section at the end that has examples of all of the above plots (to make it easier for you to understand how the custom plotting functions work) and it also contains indices and explanations for the reconstructions, variables, and statistics. As always, though, if you have any questions or find a feature that doesn’t work, let me know and I’ll do my best to help.

Lastly, once you get comfortable with the script, you can probably avoid running all the reconstructions. They take up a lot of memory, and if you let all of them run, you’ll have enough room for maybe 2 or 3 more before R refuses to comply. So if you want to play around with the different RegEM variants, numbers of included PCs, and regpar settings, I would recommend getting comfortable with the script and then loading up just the functions. That will give you plenty of memory for 15 or so reconstructions.

As a bonus, I included the reconstruction that takes the output of our reconstruction, uses it for input to the Steig method, and spits out this result:

The name for the list containing all the information and trends is “r.3.test”.

—————————————————————-

Code is here Recon.R

Question: Why do your reconstructions have twice as many ground data stations as Steig’s for the r and R^2 verifications? [Especially since you both seem to have the same number for the other reconstructions.]

Ryan, this is really excellent analysis.

One observation on the number of PCs – and this goes back to the Chladni figures. If PCs are used (and I’m not sure that this was that good a decision in the first place), you have to have enough Chladni terms to resolve the Peninsula – otherwise the Peninsula, with its high concentration of samples, gets spread around.

In a way, this is reminiscent of something that you have to watch out for in gold mining promotions. You get small high-grade nuggety zones in an ore body. Promoters like to spread these things out to make the ore body look bigger, but when you go underground, wise old owls know that the high grade zone is likely to be smaller than drawn on the maps (and the low grade zones are likely to be bigger.)

I can just imagine the hysterics if you tried to do an ore reserve using 3 PCs. Steig’s thing isn’t a whole lot different.

In this example, we all realized pretty quickly that Steig was spreading the Peninsula around Antarctica. But it’s really very impressive to see that a reconstruction in which the Peninsula is resolved trumps the 3-PC reconstruction on all counts. It’s hard to imagine that they didn’t do runs retaining more than 3 PCs. Perhaps they are in the CENSORED directory.

In light of your analysis here, their excuse for not resolving the Peninsula is pretty hollow:

Metric butt-ton? LOL… I’ll have to start reporting results in those units to program management 🙂

Awesome work as usual, BTW.

In MBH98, they report the following procedure for how many eigenvectors to retain:

I’m not exactly sure how they implemented this; I may have some notes on this from a few years ago. Despite the request from the House Energy and Commerce Committee to produce MBH code, Mann withheld this (and some other) aspects of the code.

However, my guess is that your results here would probably fit within the framework of MBH98 – also a Nature article.

Jeff ID. In your introductory remarks, you state: “The authors of Steig et al. have not been queried by myself or anyone else that I’m aware of regarding the quality of the higher PC reconstructions. And the team has largely ignored what has been going on over on the Air Vent.”

Given that the Steig et al paper was published in Nature, would not an alternative be to raise it at their blog Climate Feedback? http://blogs.nature.com/climatefeedback/

Their abridged blog policy suggests that they encourage feedback and discussion:

I thought it sweet that their point 4 demonstrates their concern for correct spelling, yet in the very first line of the policy, they mispell ‘abridged’.

Ryan O.–

Thank you for this! I find it eminently readable and fascinating. I hope you keep up this level of research and writing. Very communicative, IMO.

All I can say is beautiful work. Hold their feet to the fire, it’s the only way to improve the quality of data accepted in the future. Too bad they don’t do all this hard work themselves.

#1 Steig only used 42 stations to perform his reconstruction. I used 98, since I included AWS stations.

Nature reviewers and editors have obviously not done their homework in allowing the Steig et al. paper to go through, and have revealed an unacceptable bias in making it their cover pic.

Thus, although tempting, their blog is of little comfort as far as getting MSM coverage goes. As McIntyre has mentioned many times, comments on articles tend to be published by the journals a long time after everyone has moved on. What is needed is serious PR so the Steig et al. paper, Nature and those associated with this failure would be exposed to the scientific community (your blogs do that) but also to the public at large, who have been deceived by the MSM. Time to make the MSM aware of these spurious trends that are not “unprecedented”…

Err… Can I claim that my misspelling of misspell was intended to be ironic?

You people at these skeptics blogs, all you do is point to errors in reconstructions, but you never do your own reconstructions. Um, well, wait a minute here. OK, you did your own reconstruction, but let’s see now, oh yeah, you people at these skeptics blogs never publish your reconstructions in a peer reviewed paper – or never get them published.

RE is normally designated as the calibration statistic, but CE is used as the verification or validation statistic, so what am I missing here?

My apologies to Craig Loehle and Hu McCullough, but I suspect that the rant above would not let one reconstruction get in the way.

Thanks for providing this. We all appreciate your hard work!

RE: #11 Sure – no prob!

Outstanding. I think you have enough good stuff now to move toward publication with a theme of disproving Steig et al. The question is: do you keep going from this point to unravel the cloud masking and other open questions – with the idea of ultimately presenting an alternative which can be presented with confidence?

This is off topic, but this “framing” thing by scientists has me peeved. Michael Mann is involved.

http://sciencepolicy.colorado.edu/prometheus/purists-who-demand-that-facts-be-correct-5309

Nice to know that the general public is so stupid that “framing” is needed in the first place!

Good work. It needs to be published in a decent journal (i.e., not E & E). If it doesn’t get published then it won’t have any impact. About reviewing: I have been a reviewer for lots of journals, including Nature, on climate science papers. I agree that things slip through reviewers, but my take on this is that good papers will always stand the test of time; bad papers won’t. A reviewer only has a relatively short period of time to go through the details of a paper (we are all busy)… in the end it sometimes seems like a subjective judgement on someone’s work. The real sifting between ideas occurs after the papers are published.

Question: If you are disallowing ocean stations, then should you not also be disallowing all stations within x km of the coast, where x is the average interpolation distance, as any stations within that range are being influenced by oceanic data as well?

#12 The only difference between RE and CE is that CE uses (original – mean.ver) and RE uses (original – mean.cal) in the denominator. The residuals (the numerator) come from the verification period for both.

.

Basically, RE compares your verification performance vs. the calibration period mean, and CE compares it vs. the verification period mean. People tend to refer to RE as a “calibration period” statistic, but it’s really not. Like you pointed out earlier, it’s more of a test for non-stationarity.

.

The statistics that relate only to calibration periods and have no information from verification periods are “r” and “R^2”.
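The difference described in this comment can be seen in a few lines of Python. The sketch below computes both scores from the same verification-period residuals and changes only the mean used in the denominator; the function name and the toy numbers are made up for illustration and are not drawn from the script.

```python
def skill_scores(observed_ver, reconstructed_ver, observed_cal):
    """Return (RE, CE) for one series.

    Both scores share the verification-period residuals in the numerator;
    RE references the calibration mean, CE the verification mean.
    """
    mean_cal = sum(observed_cal) / len(observed_cal)
    mean_ver = sum(observed_ver) / len(observed_ver)

    ss_res = sum((o - r) ** 2 for o, r in zip(observed_ver, reconstructed_ver))
    ss_cal = sum((o - mean_cal) ** 2 for o in observed_ver)
    ss_ver = sum((o - mean_ver) ** 2 for o in observed_ver)

    return 1.0 - ss_res / ss_cal, 1.0 - ss_res / ss_ver


# Toy case: the verification period is about 1 degree warmer than calibration,
# and the reconstruction follows the shape but runs 0.3 degrees low.
observed_cal = [0.0, 0.2, -0.2, 0.0]
observed_ver = [1.0, 1.2, 0.8, 1.0]
reconstructed_ver = [0.7, 0.9, 0.5, 0.7]

re_score, ce_score = skill_scores(observed_ver, reconstructed_ver, observed_cal)
```

In this toy case RE comes out strongly positive while CE is negative. Because the RE denominator always includes the extra shift between the two period means, RE can never be lower than CE, which is why CE is the harder test to pass.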

Ryan O, you made me go back to Burger and Cubasch to see that you are correct, the numerator comes from the verification period – only. You seem to know your way around these reconstruction processes very well and can articulate those methods so that those of us that have not been doing them can follow the story line. I appreciate the time you have taken to do just that.

In the meantime I will be checking your work for spelling and grammatical errors and, if I can find where TCO is hiding, I might consult with him on how to proceed here.

#16 San Quniton

Good papers indeed “stand the test of time”, as well they should. But what are the chances that a paper from Ryan and the Jeffs, who are “A couple of dudes with some fancy pictures and nary a publication between them”, will be allowed to publish a paper which challenges a cover story by the “Team”? It is not unlikely that a team member would be one of the reviewers.

Nature is where this article should be published. It would be a chance for the journal to show the world that their review processes are fair and sound, and that the advancement of science is paramount.

An aside: Jeff can you put up a tip jar to go toward publication costs?

#20, I have published in a different field. After seeing the result of the SteveM publication rejection, I’m getting the feeling efforts toward publication will be a lot of work with a bunch of advocates for referees. Steve linked his paper on line and has shared some of the comments, to put it mildly, it ain’t too good. Not that I would let the advocates determine my own course of action.

#21 hence the PR blitz needed to make the MSM notice. If Greenpeace makes it why can’t we?

#21 Jeff

I think that the premise that “Steig is not robust” gets more and more clear with every post. If Nature won’t publish this then another journal will. If I were an editor at Nature and concerned about the long term, I would not want to be perceived as having stonewalled legitimate science.

I think Ryan O may have made a mistake in his update at WUWT: “Overall, Antarctica has warmed from 1957-2006. There is no debating that point. (However, other than the Peninsula, the warming is not statistically significant.)”

Number crunchers have a tendency to get so immersed in their numbers that they take on a bit too much certainty. There are so few stations in the interior of the continent and there are such large problems with the instruments and the sat readings that it is not possible to say whether there has been interior warming or cooling. The trend is so small and the data simply isn’t accurate enough or complete enough in coverage to make any informed judgment.

The statements by Trenberth, Christy and Pielke in response to the release of the paper still apply. http://epw.senate.gov/public/index.cfm?FuseAction=Minority.Blogs&ContentRecord_id=fc7db6ad-802a-23ad-43d1-2651eb2297d6

The most important criticism of Steig and Mann is not that they do lousy statistics. It is that the study was irresponsible in the first place. Regardless of whether they used the optimum number of PCs or not, there isn’t enough reliable data to make any definitive statement.

Certainly the Steig reconstruction would show Antarctica warming overall from 1957-2006 with statistical significance, but not warming from the late 1960s to 2006 with a trend significantly different from zero. The Peninsula shows significant warming over the entire time period as I recall, but the Peninsula is only a few percent of the overall Antarctic area.

It would be interesting to see what the warming/cooling would be had Steig included his own ice core studies from the 1935-1945 period, which showed that period to be the warmest decade of the 20th century in West Antarctica.

I would agree with Ryan O that most model trends will show warming in the 1957-2006 period, but to me the important point is whether those trends are significantly different from zero.

Remember, without statistically significant warming in Antarctica overall (from some point in time to present), and not just in the Peninsula, the Steig reconstruction is old news and not a cover story for Nature.

Stan,

Not a mistake; I was just plain wrong. 🙂 The intended point was that all the reconstructions we’ve done show warming, not cooling. However, as you correctly point out, not only are there significant quantified uncertainties, there are also significant unquantified ones. Saying that Antarctica has warmed – with no caveats – is an unsupportable statement.

Your second paragraph is quite apt. 😉

Kenneth – hadn’t thought of looking at his ice cores yet. 🙂 Depending on what they show, the irony may increase.

Very nice work indeed. You and the Jeffs get a standing ovation from me. Thank you for your time and dedication to this effort.

Looks like the Professor is back …

http://www.realclimate.org/index.php/archives/2009/06/on-overfitting/

re https://noconsensus.wordpress.com/2009/05/28/verification-of-the-improved-high-pc-reconstruction/#comment-5938 (Steve #5)

“I’m not exactly sure how they implemented this; I may have some notes on this from a few years ago. ”

At least we know that their search was not exhaustive, as I found another subset with larger RE. They used [1 2 3 4 5 7 9 11 14 15 16], but [ 1 2 3 4 5 7 8 9 11 12 13] works better.

( if someone wants to verify, see the lines 573-576 in

http://signals.auditblogs.com/files/2008/07/hockeystick1.txt )

2 points:

1. Your figures show long-term warming over most of Antarctica, correct?

2. Why did you pre-calibrate the satellite data to the ground data before using the satellite data to verify the ground data? Doesn’t this front-load your conclusion into the analysis?

Why is the period from 1957 to 2006 used? I know that is what Steig published, but the entire AGW arguments always select 1970 to 2000 or even 1970 to 2006 as the period showing unusual global heating. I would like to see the trend in that range of time for a comparison with the global claims.