by – Ryan ODonnell

I had made a post concerning the sensitivity of the S09 reconstruction with respect to changes in trend for the underlying surface stations HERE [insert link]. That post was a bit rushed, and the level of explanation I provided was insufficient. Bishop Hill did a better job of explaining it HERE [insert link], and I will use his piecemeal approach here, as it presents the issue very clearly.

The previous post was also incomplete. It did not show how our reconstruction method responds to similar perturbations. I will now rectify that situation.

The sensitivity tests are relatively simple:

1. Add various trends to the raw Peninsula station data

2. Subtract various trends from the raw Peninsula station data

3. Add various trends to the raw spliced Byrd data and Russkaya data

4. Subtract various trends from the raw spliced Byrd data and Russkaya data

For the S09 case, I simply spliced the Byrd data with no offset. For our reconstructions, I spliced them together using the nominal offset determined by RegEM from the original reconstruction.
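The perturbation itself can be sketched in a few lines. This is a minimal illustration rather than the code used for the reconstructions: the function names, the per-decade units, the centering of the ramp, and the synthetic series are all assumptions made for the example. It shows that adding a linear ramp to raw monthly data with gaps shifts the fitted trend by exactly the amount added, and leaves the gaps in place for the later infilling step.

```python
import numpy as np

def add_trend(series, trend_per_decade, months_per_decade=120):
    """Add a linear ramp to a monthly series; missing months (NaN) stay missing."""
    t = np.arange(len(series)) / months_per_decade
    ramp = trend_per_decade * (t - t.mean())  # centered ramp (an assumption)
    return series + ramp

def ols_trend(series, months_per_decade=120):
    """Ordinary least-squares slope per decade, ignoring missing months."""
    t = np.arange(len(series)) / months_per_decade
    ok = ~np.isnan(series)
    slope, _intercept = np.polyfit(t[ok], series[ok], 1)
    return slope

# Synthetic stand-in for one raw station record (not real station data).
rng = np.random.default_rng(0)
raw = rng.normal(0.0, 1.0, 600)
raw[100:160] = np.nan  # a gap, as in real station records

perturbed = add_trend(raw, 0.20)
delta = ols_trend(perturbed) - ols_trend(raw)
print(f"change in fitted trend: {delta:+.3f}")
```

Because least squares is linear in the data, the change in the fitted slope equals the added trend regardless of the noise or the position of the gaps.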

The purpose of this test is to determine which reconstruction is most responsive to changes in the underlying data. If the reconstruction responds “poorly” (in other words, does not properly respond), then one can conclude that the method results in a reconstruction that is not a “good” representation of the data. If the reconstruction responds “well”, then one can conclude that – for the cases tested – the reconstruction appears to represent the data “well”. Since “good” and “well” are qualitative, rather than try to assign criteria to them, we will simply compare whether the S09 reconstruction or ours does better.

First, let’s take a look at where these stations are:

In the above graphic, the circles represent the stations used by S09 as predictors. The crosses represent stations used by us as predictors. The triangles represent stations not used as predictors for either study, but were used by us for additional verification. Now that we know where the stations are, let’s take a look at the original S09 reconstruction, and our newly published one:

EXPERIMENT 1: ADDING TREND TO PENINSULA STATIONS

For this experiment, we will add trends to the Peninsula stations, which are the stations in the light purple from the first graphic. Let’s see what happens when we do this:

When we do this, we note an interesting thing: adding a trend to the Peninsula stations using the S09 method results in an increase in reconstruction warming in West Antarctica. In fact, the change in the West Antarctic warming (+0.07) is greater than the change in the Peninsula warming (+0.05), and very nearly equal to the change in the East Antarctic warming (+0.04).

Now what happens to our reconstruction? Well, the change in warming in West Antarctica is only +0.01, there is no change in the East Antarctic trend, and the Peninsula trend increases by +0.12. At the actual station locations, the trend is +0.18 . . . which is quite close to the +0.20 we added to the raw data. It is quite clear that our method captures both the magnitude and location of the change much better than the S09 method.
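One way to put the two responses side by side is to express each regional change as a fraction of the trend added to the raw data. The sketch below simply takes the figures quoted in the two paragraphs above at face value; the dictionary layout and function names are made up for the illustration.

```python
# Figures quoted in the text for Experiment 1 (taken at face value).
ADDED_TREND = 0.20
REGIONAL_CHANGE = {
    "S09":  {"Peninsula": 0.05, "West": 0.07, "East": 0.04},
    "ours": {"Peninsula": 0.12, "West": 0.01, "East": 0.00},
}
STATION_LOCATION_CHANGE_OURS = 0.18  # change at the actual station locations

def response_fraction(change, added=ADDED_TREND):
    """Fraction of the added trend that shows up in the reconstruction."""
    return change / added

def most_responsive(method):
    """Region whose trend changed the most for the given method."""
    changes = REGIONAL_CHANGE[method]
    return max(changes, key=lambda region: abs(changes[region]))

for method, changes in REGIONAL_CHANGE.items():
    fractions = {r: round(response_fraction(c), 2) for r, c in changes.items()}
    print(method, fractions, "largest change:", most_responsive(method))

print("station-location recovery (ours):",
      round(response_fraction(STATION_LOCATION_CHANGE_OURS), 2))
```

On these numbers the largest S09 response lands in West Antarctica rather than on the Peninsula, while ours lands on the Peninsula and recovers about 90% of the added trend at the station locations.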

These are slightly different from the results I posted in the “Steig’s Trick” thread, because earlier I added the trends after infilling the missing station data, and then fed these back into RegEM for the reconstruction. As the reconstruction method technically includes the infilling step, a more accurate sensitivity test is to add the trends prior to infilling, which is what I have done above.

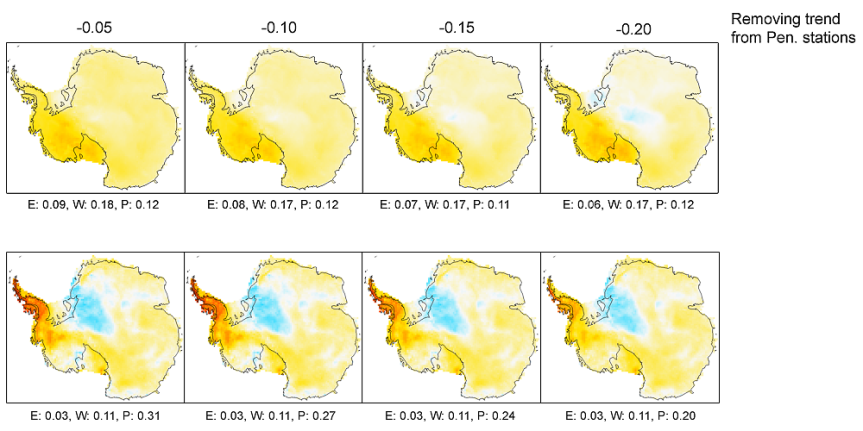

EXPERIMENT 2: SUBTRACTING TREND FROM PENINSULA STATIONS

For this experiment, we will subtract trends from the Peninsula stations. Let’s see what happens when we do this:

When we subtract trend from the Peninsula stations, we can note similar, interesting things. For the S09 reconstruction, the Peninsula and West Antarctic trends hardly change at all – a net of 0 for the Peninsula for the experiment, and a net of -0.03 for West. The biggest change actually occurs in East Antarctica . . . where the trend is nearly halved.

For our reconstruction, however, the trend in both East Antarctica and West Antarctica is unchanged. The only regional trend that changes is the Peninsula trend, by -0.15.

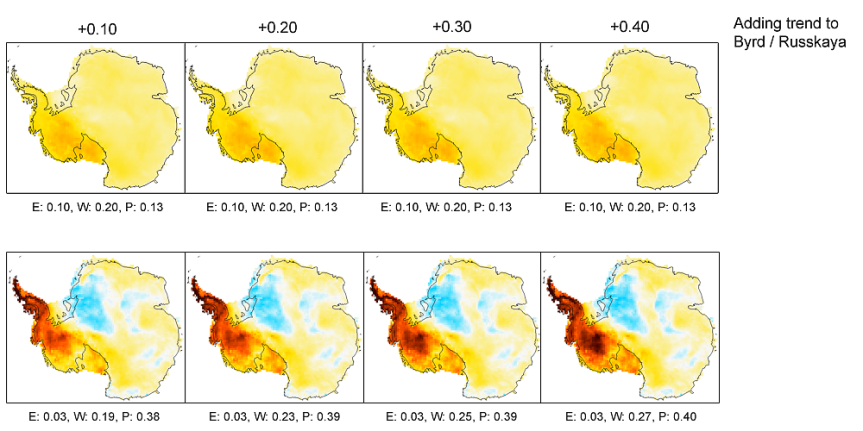

EXPERIMENT 3: ADDING TREND TO BYRD / RUSSKAYA

For this experiment, we will attempt to determine how well the reconstructions respond to changes in the West Antarctic data. We will do this by adding trends to Russkaya and Byrd. Let’s examine what happens:

As before, it is quite clear that the S09 method responds poorly. In this case, adding significant trends to West Antarctic stations results in . . . well, no change at all.

For our reconstructions, however, the change is quite dramatic . . . and highlights the problem with the way the AVHRR spatial structure is ignored in S09. By regressing solely against the temporal information in the satellite data – without accounting for where that information primarily came from geographically, the S09 reconstruction cannot properly locate the trend. In our case, because we explicitly use the satellite spatial structure in the regression, the spatial sensitivity is rather good.

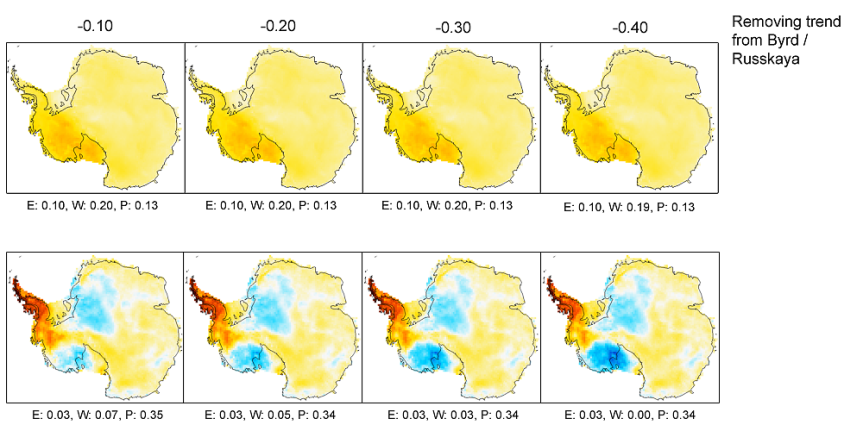

EXPERIMENT 4: SUBTRACTING TREND FROM BYRD / RUSSKAYA

Continuing with our attempt to determine how well the reconstructions respond to changes in the West Antarctic data, let’s look at what happens when we subtract trends from the West Antarctic stations:

In this case, the S09 reconstruction does eventually respond . . . with the West Antarctic trend dropping by 0.01 after a -0.40 trend is added to the West Antarctic station data. However, one would have a hard time qualifying that as a “good” response.

For our reconstruction, the response is quite good. If a -0.40 trend is added to Byrd and Russkaya, the West Antarctic trend drops to 0, and the point estimate at Byrd is about -0.25.

At this point, I will reprint something I said to Gavin at RealClimate here on March 3rd, 2009:

The comment about whether 3 or 4 PCs would have changed anything is irrelevant. The only relevant question to ask is “How many PCs would be required to properly capture the geographic distribution of temperature trends?” with the followup question of, “Does this number of PCs result in inclusion of an undesirable magnitude of mathematical artifacts and noise?”

If the answer to the followup is “yes”, then the conclusion is that the analysis is not powerful enough to geographically discriminate temperature trends on the level claimed in the paper. It doesn’t mean the authors did anything wrong or inappropriate in the analysis (I am NOT saying or implying anything of the sort). It simply means that the conclusion of heretofore unnoticed West Antarctic warming overreaches the analysis.

In answer to your question about how much variation they need to explain, that is entirely dependent on the level of detail they want to find. If they wish to have enough detail to properly discriminate between West Antarctic warming and peninsula warming, then they must use an appropriate number of PCs. If they wish to simply confirm that, on average, the continent appears to be warming without being able to discriminate between which parts are warming, then the number of PCs required is correspondingly less.
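The question of how many PCs are needed to locate a trend geographically can be illustrated with a deliberately tiny toy. It is not RegEM, it does not use the AVHRR eigenvectors, and it is not either paper's method: the six cells, the three hand-made orthonormal spatial patterns, and the +0.2 Peninsula-only trend are all assumptions chosen so the arithmetic can be checked by hand. The sketch projects a localized trend onto the first k patterns and shows what survives.

```python
import numpy as np

# Six hypothetical grid cells: 0-1 "Peninsula", 2-3 "West", 4-5 "East".
# Three hand-made spatial patterns standing in for retained eigenvectors.
patterns = np.array([
    [1, 1, 1, 1, 1, 1],      # continent-wide pattern
    [2, 2, -1, -1, -1, -1],  # Peninsula versus the rest
    [0, 0, 1, 1, -1, -1],    # West versus East
], dtype=float)
patterns /= np.linalg.norm(patterns, axis=1, keepdims=True)  # orthonormal rows

# A trend that exists only on the Peninsula cells.
true_trend = np.array([0.2, 0.2, 0.0, 0.0, 0.0, 0.0])

def project(trend, k):
    """Keep only the part of the trend expressible with the first k patterns."""
    basis = patterns[:k]
    return basis.T @ (basis @ trend)

for k in (1, 2):
    print(k, np.round(project(true_trend, k), 3))
```

With one pattern retained, the Peninsula-only trend comes back as the same value (0.2/3) in every cell, that is, spread evenly over the whole toy continent. With two patterns it is recovered exactly, because the localized trend lies in the span of the first two patterns. Real eigenvectors are not this tidy, so the toy shows only the mechanism, not the magnitude.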

It should be titled — the Antarctic, a Picture Story.

Or “My Pet Antarctica”.

The links are missing, they should be these two I believe:

1. http://climateaudit.org/2011/02/08/coffin-meet-nail/

2. http://bishophill.squarespace.com/blog/2011/2/8/steigs-method-massacred.html

In Experiment 1, text currently reads

As I interpret it, 0.05 (left), 0.10 (center), or 0.15 (right) degree synthetic trends were added to the raw data. Typo or misunderstanding?

Just a detail: In exp 1 you seem to report the change in trend with respect to the +0.05 trend, rather than w.r.t. to the trend without having artificially added anything to it.

I.e. it makes more sense to say “the Peninsula trend increases by +0.15” in OD10 when an artificial trend of +0.2 is added to the Peninsula (rather than your quoted 0.12).

Those graphs are pretty convincing overall.

Ryan,

I’ve got your code working now, and I’ve experimented with Steig’s method using more than 3 PC’s. I’ve done just one trend case (the one you have perturbed here), but it does make a difference, and the trend plot looks a lot more like yours. I’ll try to do the sensitivity test.

typo: “the one can conclude that – for the cases tested ” = “then one can conclude …”

S09 is a one-trick pony. It is designed to smear the Peninsula change over the whole continent.

Re: AMac (Feb 17 00:09),

Ah. The first graphic has two images of Antarctica side-by-side.

The second through fifth graphics are four Antarctica-images wide.

Firefox’s settings had masked the rightmost column of Antarcticas, leading to my confusion. Others might have similar problems.

Nick,

Comment left at your place.

Nick – Thank you for taking the time for doing these sensitivity tests. This is perhaps the starkest demonstration of the differences between the S09 and O10 methods and clearest demonstration that, whether or not it results in the “true” temperature reconstruction, the O10 method is clearly better than the S09 method.

Maybe we should grab Bill Cosby with some crayons and pencils to help make things more clear to the RC crowd.

/obscure but relevant.

//Cosby never used crayons or pencils in that show, always dry-erase… I was lied to as a child.

A quick comment/question:

1) There is a circle-triangle combination in East Antarctica there. The existence of circle/triangle is contradictory to your explanation of what those symbols mean.

2) You guys do seem to have better spatial distribution of your sites overall, just based on your map here. However, why are there so many triangles on the west side? Why were those sites excluded? It looks like you used them for verification, but why not include them for better spatial resolution?

Triangles should be “not used as predictors for our study”, though some were used by S09. We only included as predictors stations with at least 96 months of data because, if the data is less than that, you start getting significant instability in the regressions. Unfortunately, the spatial distribution of stations with > 96 months is not even. It was a tradeoff.
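The 96-month screening rule is easy to state in code. The sketch below is only an illustration: the station names, the array layout (months in rows, stations in columns) and the function name are hypothetical, and the threshold is read as "at least 96 months".

```python
import numpy as np

def select_predictors(station_matrix, names, min_months=96):
    """Return names of stations with at least `min_months` non-missing months.

    station_matrix: array of shape (n_months, n_stations), NaN where missing.
    """
    valid_counts = np.sum(~np.isnan(station_matrix), axis=0)
    return [name for name, count in zip(names, valid_counts) if count >= min_months]

# Hypothetical records with 120, 96 and 95 valid months respectively.
data = np.full((120, 3), np.nan)
data[:120, 0] = 0.0
data[:96, 1] = 0.0
data[:95, 2] = 0.0

predictors = select_predictors(data, ["A", "B", "C"])
print(predictors)
```

Stations that fail the screen are not discarded; as described above, they can still be held back for verification.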

There are a lot of old posts prior to any serious musings of an O10 paper which are interesting to look back on. This post of Roman’s definitely falls into the “simple and elegant” category IMO, and fits nicely with your post here Ryan.

Ryan – I apologize for thanking Nick when I meant you. I think it was because I had just read your post above mine.

You can thank Nick, too. He’s doing them as well. It will be interesting to see whether he ends up with the same conclusions we did.

RyanO, you do a great job of explaining what you have done and this is another example of that. You are too hard on yourself.

The sensitivity testing you have provided would have to be the proverbial straw for anyone with doubts about S(09) redistributing Peninsula warmth, particularly for anyone who uses the code and applies these tests themselves. It also shows that there are methods/algorithms that do not spread the warming, so we have a positive as well as a negative result.

When will those defending S(09) be able to deal with the results of these sensitivity tests? It is obvious that Steig does not want to lose his grip on West Antarctica warming and showing that the S(09) West Antarctica warming was spread from the Peninsula has obviously been the most difficult pill to swallow.

I would think that climate scientists would be rather quick to see the consequences of what you have shown here. Scientist/advocates, on the other hand, might well want now to blow up the whole foundation of both S(09) and O(10) (not really O(10) because it’s premised on methodologies) by discrediting AVHRR data and/or station data.

Kenneth,

If folks wish to blow up both papers, that is fine by me – so long as the arguments are plausible. That is how science works. The point of our paper was to try to prevent someone else duplicating the S09 method in the future. Blowing up both papers accomplishes that.

In fact, in our concluding paragraph, we invite that very opportunity. There is something wrong with the AVHRR data. We did what we felt was best to minimize the impact of those errors. Nevertheless, the errors exist. It is quite possible that those (as yet not fully categorized) errors in the AVHRR data really do render both analyses incorrect.

If so, then one could always re-run our method with the new data to provide a better result . . . which, I suppose, is one advantage over S09.

Ryan,

Many thanks for investing the time to do this. It pretty well answers the question of model fidelity, methinks. One small remaining issue will be the optimum number of PC’s when otherwise following S(09). If Nick can find the number of PC’s with S(09) methodology that maximizes fidelity to manually added trends (like you show above), then there may not be much more to discuss. Are you taking bets on if there will be a formal comment from the S(09) authors on O(10) at JoC?

Steve,

Nope . . . not betting on that one. There may be. There may not be.

Kenneth, might this be yet another item to add to your list of stuff to run through your distance correlation scripts – pairwise correlation comparison of Antarctic surface station data vs corresponding AVHRR grid points? This would definitely elucidate Ryan’s point.

Ryan,

“There may be. There may not be.”

Yes, but this post probably made it less likely. Nick’s efforts may make it less likely yet.

Actually: A Tale of Two Antarcticas

RyanO–

For clarification: when you say you add a trend to the peninsula stations, you mean you add trends to ground data only, and hold satellite data constant. Right?

Lucia,

Yep! The satellite data is left entirely unaltered in these experiments.

Nice work Ryan (and Nick). It’s great to see discussion focussing on the scientific issues again.

Ryan

I thank you for directing me here from Climate Abyss. I have reviewed both this article and that at Nick Stokes site and find the content convincing.

My qualifications are somewhat out of date, having studied Computation as an undergraduate at UMIST in the early ’70s, I have however, been gainfully employed in the information technology industry ever since.

In particular, I find the emphasis placed in Eric’s reviews of your paper regarding the choice of ‘kgnd’ and the subsequent public criticism somewhat hypocritical, given its implication that any other reasonable choice for ‘kgnd’ produces more warming in the Western Antarctic.

Why so? Because it would appear from Nick’s analysis that any other reasonable choice of number of PCs in S09 would produce less warming in the WA…

I anticipate a statistical response from Steig et al with great interest

“Kenneth, might this be yet another item to add to your list of stuff to run through your distance correlation scripts – pairwise correlation comparison of Antarctic surface station data vs corresponding AVHRR grid points? This would definitely elucidate Ryan’s point.”

Oh, no. More work assignments from LL. Since your last assignment I have been looking at pair-wise series correlations versus distance separations for a comparison between observed (ground and satellite), modeled and proxy temperatures. I have added ARIMA modeling and breakpoint analyses. It has become an all winter’s project. I am guessing that Ryan has already done similar series correlation versus distance with both AVHRR and ground stations for comparison.

Pairwise correlations get very long very quickly when you want to look at as many grids as I recall there are in the Antarctica AVHRR data set (n*(n-1)/2), although one would probably break down the analysis using the three regions.
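To give a feel for how fast n*(n-1)/2 grows, here is a small sketch. The grid sizes are round hypothetical numbers, not the actual AVHRR cell count.

```python
from math import comb

def pair_count(n):
    """Number of distinct unordered pairs among n series: n*(n-1)/2."""
    return comb(n, 2)

# Hypothetical series counts, chosen only to show the growth.
for n in (100, 1000, 5000):
    print(f"{n:>5} series -> {pair_count(n):>10,} pairs")
```

Splitting the grid into regions helps because the pair counts of the pieces add up to far fewer than the pair count of the whole.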

“In fact, in our concluding paragraph, we invite that very opportunity. There is something wrong with the AVHRR data.”

It is my understanding that your methods would be affected by spatial correlation instability and much of what you said could be wrong with AVHRR has to do, as I recall, with temporal instabilities and not necessarily spatial.

I do believe that we will be hearing more about reanalysis of the Antarctica temperature data and even problems with the AVHRR data. Maybe even we will see more climate modeling of Antarctica temperatures. I do recall Santer et al blowing up the climate model results for the tropical troposphere (and surface) to show that the range of model results enclosed the observed ones.

I actually had a smile on my face when I posted – but I know you don’t do smilies so I didn’t want to offend you (imagine smilie face here).

I hope you are at least enjoying your winter project. I definitely think you will have some interesting contributions to show and discuss when you are done. After some quick progress with R last fall / early winter I have gotten real lazy lately – preferring instead to live my life of scientific analysis vicariously through others.

Layman Lurker said February 17, 2011 at 2:41 pm

“Kenneth, might this be yet another item to add to your list of stuff to run through your distance correlation scripts – pairwise correlation comparison of Antarctic surface station data vs corresponding AVHRR grid points?”

I did look at this question at the time. The correlations are in most cases quite reasonable, but not that high. For the 63 station data set we used, R-squared varied between pretty low and over 0.8, I think, with getting on for half the stations having a best fit R-squared of 0.5 or more.

The best fit wasn’t actually always with AVHRR grid cell in which the station was located. Sometimes one of the 8 surrounding grid cells gave a better fit. But we stuck with the co-located grid cells for the published reconstructions.
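The comparison between the co-located cell and its 8 neighbours can be sketched as follows. This is an illustration under stated assumptions, not the code behind the numbers above: it treats the grid as a plain rectangular array of shape (time, rows, cols), uses synthetic data, and the function names are invented for the example.

```python
import numpy as np

def r_squared(x, y):
    """Squared Pearson correlation over months where both series are present."""
    ok = ~np.isnan(x) & ~np.isnan(y)
    r = np.corrcoef(x[ok], y[ok])[0, 1]
    return r * r

def best_neighbor(station, grid, row, col):
    """Best R-squared among the co-located cell and its (up to) 8 neighbours.

    grid has shape (n_months, n_rows, n_cols); returns (score, (row, col)).
    """
    best = None
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            r, c = row + dr, col + dc
            if 0 <= r < grid.shape[1] and 0 <= c < grid.shape[2]:
                score = r_squared(station, grid[:, r, c])
                if best is None or score > best[0]:
                    best = (score, (r, c))
    return best

# Synthetic example: the station tracks cell (2, 3), but sits in cell (2, 2).
rng = np.random.default_rng(1)
grid = rng.normal(size=(300, 5, 5))
station = grid[:, 2, 3] + 0.5 * rng.normal(size=300)
station[10:30] = np.nan  # a gap in the station record

score, cell = best_neighbor(station, grid, 2, 2)
print(cell, round(score, 2), round(r_squared(station, grid[:, 2, 2]), 2))
```

Fixing the cell in advance, as was done for the published reconstructions, avoids selecting predictors on the basis of how well they happen to fit.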

There is of course considerably more local temperature variation ‘noise’ in the station data than in the area averaged AVHRR data, as reflected in its higher standard deviation than the corresponding AVHRR data. And, correspondingly, some of the variance of the AVHRR data reflects local temperature fluctuations that don’t affect the ground station.

Not picking a pre-determined grid cell would smack of Mann’s CPS calibration method. Tsk. Glad you stayed on the straight and narrow.

Do you think the problems with AVHRR might have been related to cloud masking?

“There is of course considerably more local temperature variation ‘noise’ in the station data than in the area averaged AVHRR data, as reflected in its higher standard deviation than the corresponding AVHRR data. And, correspondingly, some of the variance of the AVHRR data reflects local temperature fluctuations that don’t affect the ground station.”

This hits home when one attempts to compare satellite measurements from gridded data with, for example, station data from GHCN. An apples to apples comparison requires a grid where the station data is more or less complete. I can, however, compare apples to apples with climate models to satellite data. The Antarctica and Arctic regions are not covered completely by the satellites so there always seems to be a problem with these comparisons. Satellite to AVHRR would have been a natural had we had good satellite data that far south. On the other hand, you have GISS data that covers both poles way back in time. Unfortunately GISS simply does some major extrapolations to get that information into their data set.

Kenneth:

And that is exactly why these types of analyses are non-trivial. The details matter.


Sometimes when I reread my posts I really get upset with my lack of specificity. In the above, when I referred to satellite data (MSU), I meant like that measured and adjusted by UAH and RSS. Obviously AVHRR also comes by way of satellite.

Kenneth:

It’s okay, man . . . I understood what you were getting at. There are a lot of things about satellite data (and ground data) that seem to be taken for granted, and care is required to ensure that something is not assumed to be true that turns out to be false. Small differences can have a significant impact on results. It doesn’t matter whether it’s AVHRR or MSU . . . many of the same principles apply.

Ryan,

I much admire the significant statistical work that you and colleagues have done to refute Steig’s flawed work, thus hopefully limiting the damage of the resulting misplaced warmest euphoria. However, reading this blog trail, I have to say that discussing how many principal components to choose etc., etc., etc., does smack a little of discussing how many angels can dance on a pinpoint!

Surely the real lesson for ordinary intelligent non-doctrinaire people is that the data is rubbish.

Just look at the spatial temperature inhomogeneity throughout Antarctica, not just in the West. Almost all the 20 or 30 weather stations are situated round the edge and (surprise, surprise) they show relatively warm levels (typically minus 5 to minus 10degC) and mainly show positive warming trends. This is doubtless because they are largely measuring the effects of the adjacent (relatively) warm ocean waters. On the other hand, Amundsen Scott, one of the only two weather stations far into the interior of that great continent (it is actually located at the South Pole) shows an average annual temperature of minus 49degC. The other interior station, Vostok, shows minus 55degC.

It is quite clear to me from the geography that only the coastal fringe is (relatively) warm with the whole vast interior permanently much colder. Even leaving aside the embarrassing lack of regularity in surface station positioning, this is hardly a homogeneous and gently undulating temperature landscape to interpolate across!

In any other scientific endeavour, both sides would admit the above obvious uncertainties and the pressure would then be directed towards spending climate science dollars on far better instrumentation to verify a very obvious hypothesis.

The comment about whether 3 or 4 PCs would have changed anything is irrelevant. The only relevant question to ask is “How many PCs would be required to properly capture the geographic distribution of temperature trends?” with the followup question of, “Does this number of PCs result in inclusion of an undesirable magnitude of mathematical artifacts and noise?”

If the answer to the followup is “yes”, then the conclusion is that the analysis is not powerful enough to geographically discriminate temperature trends on the level claimed in the paper. It doesn’t mean the authors did anything wrong or inappropriate in the analysis . . .

There were a lot of individual issues addressed in O10. The blending of sat info with temp station info was also addressed. Nick Stokes recent post was very predictable for me. It isn’t as though the methods are astronomically complex, but they are uniquely strange. The Sat trends are bad, the sat variance (short term) is reasonably good. PC’s are one issue, but the way the information is combined is another.

O10 worked to remove the problematic info from the rest. The station info was truncated for noise and then fit to sat spatial info – also truncated for noise. S09 was simply mashed together.
